Kaizen #131 - Bulk Write for parent-child records using Scala SDK

Hello and welcome back to this week's Kaizen!

Last week, we discussed how to configure and initialize the Zoho CRM Scala SDK. This week, we will explore the Bulk Write API and its capabilities. Specifically, we will focus on importing parent and child records in a single bulk write operation, and on how to do this using the Scala SDK.

Quick Recap of Bulk Write API

The Bulk Write API facilitates efficient insert, update, or upsert operations on large datasets in your CRM account. It operates asynchronously, scheduling jobs to handle the data operations. Upon completion, a notification is sent to the specified callback URL; alternatively, you can check the job status periodically.

When to use Bulk Write API?

- When scheduling a job to import a massive volume of data.

- When you need to process more than 100 records in a single API call.

- When conducting background processes like migration or initial data sync between Zoho CRM and external services.

Steps to Use Bulk Write API:

- Prepare CSV File: Create a CSV file with field API names as the first row and data in subsequent rows.

- Upload Zip File: Compress the CSV file into a zip format and upload it via a POST request.

- Create Bulk Write Job: Use the uploaded file ID, callback URL, and field API names to create a bulk write job for insertion, update, or upsert operations.

- Check Job Status: Monitor job status through polling or callback methods. Status could be ADDED, INPROGRESS, or COMPLETED.

- Download Result: Retrieve the result of the bulk write job, typically a CSV file with job details, using the provided download URL.
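The status check in the steps above can be sketched as a simple polling loop. This is a minimal illustration rather than SDK code: `fetchStatus` is a hypothetical stand-in for whatever call returns the job's current status, and the only status values used are the three named above.

```scala
import scala.annotation.tailrec

object JobStatusPolling {
  // Terminal status named in the steps above.
  val Completed = "COMPLETED"

  // fetchStatus is a hypothetical function supplied by the caller; it is not an SDK method.
  // Returns Some("COMPLETED") once the job finishes, or None if maxAttempts runs out first.
  @tailrec
  def waitForCompletion(fetchStatus: () => String, maxAttempts: Int, delayMillis: Long): Option[String] =
    if (maxAttempts <= 0) None
    else {
      val status = fetchStatus()
      if (status == Completed) Some(status)
      else {
        // ADDED or INPROGRESS: wait, then ask again.
        Thread.sleep(delayMillis)
        waitForCompletion(fetchStatus, maxAttempts - 1, delayMillis)
      }
    }
}
```

If you supply a callback URL, polling like this is optional; it is mainly useful when a callback endpoint is not available.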

In our previous Kaizen posts - Bulk Write API Part I and Part II, we have extensively covered the Bulk Write API, complete with examples and sample codes for the PHP SDK. We highly recommend referring to those posts before reading further to gain a better understanding of the Bulk Write API.

With the release of our V6 APIs, we have introduced a significant enhancement to the Bulk Write API. Previously, bulk write operations required separate API calls for the parent module and for each child module. With this enhancement, you can now import them all in a single operation or API call.

Field Mappings for parent-child records in a single API call

When configuring field mappings for bulk write operations involving parent-child records in a single API call, there are two key aspects to consider: creating the CSV file containing the data and constructing the input JSON for the bulk write job.

Creating the data CSV file:

To set up the data for a bulk write operation involving parent-child records, you need to prepare separate CSV files: one for the parent module records, and one for each child module's records. In these CSV files, appropriate field mappings for both parent and child records need to be defined.

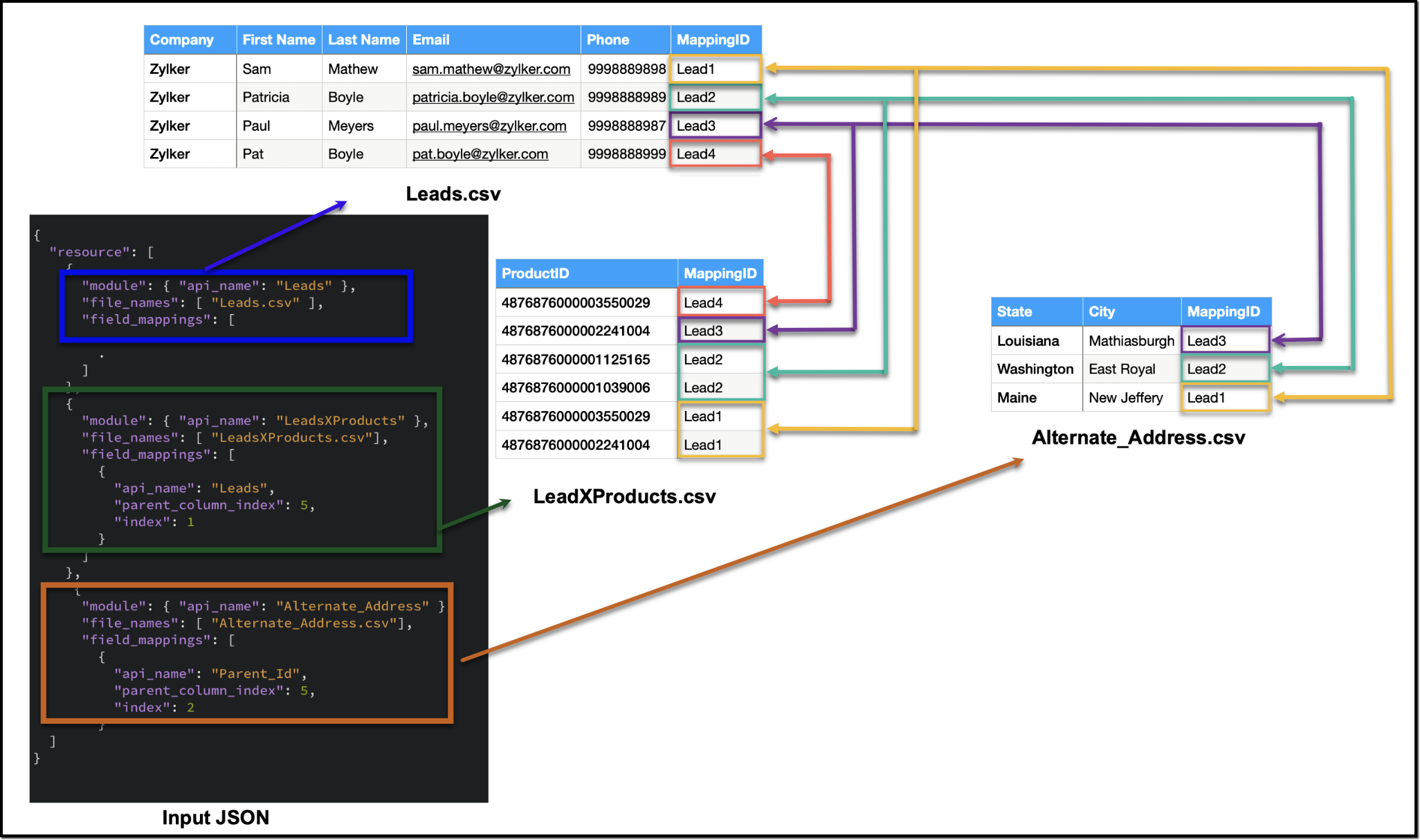

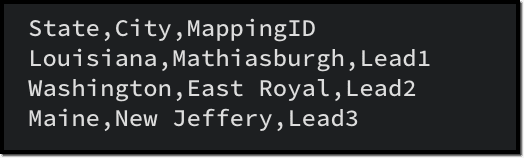

The parent CSV file will contain the parent records, while the child CSV file will contain the child records. To make sure that each child record is linked to its respective parent record, we will add an extra column (MappingID in the image below) to both the parent and child CSV files. This column will have a unique identifier value for each parent record. For each record in the child CSV file, the value in the identifier column should match the value of the identifier of the parent record in the parent CSV file. This ensures an accurate relationship between the parent and child records during the bulk write operation.
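As a quick sanity check before zipping the files, you could verify that every child row points at an identifier that actually exists in the parent file. The sketch below is plain Scala, not SDK code; it assumes simple CSV content without quoted fields or embedded commas, and the column positions are passed in by the caller.

```scala
object MappingIdCheck {
  // Splits a simple CSV line on commas; assumes no quoted fields or embedded commas.
  def cells(line: String): Vector[String] = line.split(",", -1).map(_.trim).toVector

  // Returns the child data rows whose identifier value has no matching parent row.
  // The first line of each file is treated as the header and skipped.
  def orphanRows(parentLines: Seq[String], parentIdCol: Int,
                 childLines: Seq[String], childIdCol: Int): Seq[String] = {
    val parentIds = parentLines.drop(1).map(l => cells(l)(parentIdCol)).toSet
    childLines.drop(1).filterNot(l => parentIds.contains(cells(l)(childIdCol)))
  }
}
```

For example, with a parent file whose MappingID column is at position 5 and a child file whose MappingID column is at position 1, `MappingIdCheck.orphanRows(parentLines, 5, childLines, 1)` lists the child rows that would not link to any parent.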

Please be aware that the mapping of values is solely dependent on the mappings defined in the input JSON. In this case, the column names in the CSV file serve only as a reference for you. Please refer to the notes section towards the end of this document for more details.

Creating the CSV file remains consistent across all types of child records, and we have already discussed how each child record is linked to its respective parent record in the CSV file. To facilitate the same linkage in the input JSON, we have introduced a new key called parent_column_index. This key assists us in specifying which column in the child module's CSV file contains the identifier or index linking it to the parent record. In the upcoming sections, we will explore preparing the input JSON for various types of child records.

Additionally, since the zip file now contains multiple CSV files, we have introduced another new key named file_names in the resource array. file_names maps each CSV file to its corresponding module.

When adding parent and child records in a single operation, list the parent module details first, followed by the child module details, in the resource array of the input body.
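The ordering and linkage rules above can be checked before a job is submitted. The following is a rough sketch only: `Mapping` and `ResourceSpec` are hypothetical plain-Scala mirrors of the JSON keys, not SDK classes, and the check assumes that every resource after the first one is a child of the first.

```scala
object ResourceOrderCheck {
  // Hypothetical mirrors of the JSON keys (api_name, index, parent_column_index, file_names).
  final case class Mapping(apiName: String, index: Int, parentColumnIndex: Option[Int] = None)
  final case class ResourceSpec(module: String, fileNames: Seq[String], mappings: Seq[Mapping])

  // A resource is treated as a child if any of its mappings carries parent_column_index.
  def isChild(r: ResourceSpec): Boolean = r.mappings.exists(_.parentColumnIndex.isDefined)

  // Returns human-readable problems; an empty result means the basic rules hold.
  def problems(resources: Seq[ResourceSpec]): Seq[String] = {
    val orderIssues =
      if (resources.headOption.exists(isChild)) Seq("the first resource should be the parent module")
      else Seq.empty
    val childIssues = resources.drop(1).filterNot(isChild)
      .map(r => s"${r.module} has no mapping with parent_column_index")
    val fileIssues = resources.filter(_.fileNames.isEmpty)
      .map(r => s"${r.module} has no file_names entry")
    orderIssues ++ childIssues ++ fileIssues
  }
}
```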

1. Multiselect Lookup Fields

In scenarios involving multiselect lookup fields, the Bulk Write API now allows for the import of both parent and child records in a single operation.

In the context of multiselect lookup fields, the parent module refers to the primary module where the multiselect lookup field is added. For instance, in our example, consider a multiselect lookup field in the Leads module linking to the Products module.

Parent Module : Leads

Child module : The linking module that establishes the relationship between the parent module and the related records (LeadsXProducts)

Here are the sample files for the "LeadsXProducts" case:

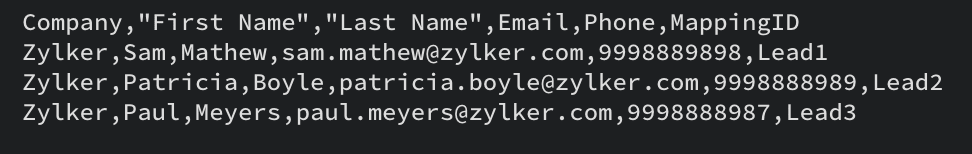

Leads.csv (Parent)

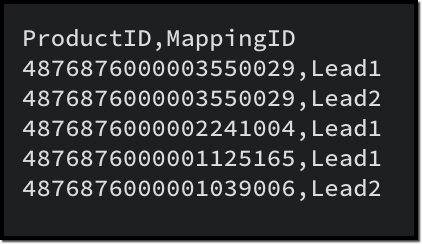

LeadsXProducts.csv (Child)

Given below is a sample input JSON for this bulk write job. Please note that the index of the linking column in the child CSV file should be mapped under the key index, and the index of the identifier column in the parent CSV file should be mapped under parent_column_index.

To map the child records to their corresponding parent records (linking module), you must use the field API name of the lookup field that links to the parent module. For example, in this case, the API name of the lookup field linking to the Leads module from the LeadsXProducts is Leads.

    {
      "operation": "insert",
      "ignore_empty": true,
      "callback": {
        "url": "http://www.zoho.com",
        "method": "post"
      },
      "resource": [
        {
          "type": "data",
          "module": {
            "api_name": "Leads" //parent module API name
          },
          "file_id": "4876876000006855001",
          "file_names": [
            "Leads.csv" //parent records CSV file
          ],
          "field_mappings": [ // field mappings for the parent record fields
            {
              "api_name": "Company", //field API name
              "index": 0 //index in the CSV file
            },
            {
              "api_name": "First_Name",
              "index": 1
            },
            {
              "api_name": "Last_Name",
              "index": 2
            },
            {
              "api_name": "Email",
              "index": 3
            },
            {
              "api_name": "Phone",
              "index": 4
            }
          ]
        },
        {
          "type": "data",
          "module": {
            "api_name": "LeadsXProducts" //child module API name
          },
          "file_id": "4876876000006855001",
          "file_names": [
            "LeadsXProducts.csv" //child records CSV file
          ],
          "field_mappings": [
            {
              "api_name": "Products",
              "find_by": "id",
              "index": 0
            },
            {
              "api_name": "Leads", //field API name of the lookup field in the Linking Module
              "parent_column_index": 5, // the index of the identifier column in the parent CSV file
              "index": 1 //index of the identifier column in the child CSV file
            }
          ]
        }
      ]
    }

The following is a sample code snippet for the Scala SDK, to achieve the same functionality. Find the complete code here.

    var module = new MinifiedModule() // Create a new instance of MinifiedModule
    module.setAPIName(Option("Leads")) // Set the API name for the module to "Leads"
    resourceIns.setModule(Option(module))
    resourceIns.setFileId(Option("4876876000006899001")) // Set the file ID for the resource instance
    resourceIns.setIgnoreEmpty(Option(true))
    var filenames = new ArrayBuffer[String] // Create a new ArrayBuffer to store file names
    filenames.addOne("Leads.csv")
    resourceIns.setFileNames(filenames) // Set the file names for the resource instance
    // Create a new ArrayBuffer to store field mappings
    var fieldMappings: ArrayBuffer[FieldMapping] = new ArrayBuffer[FieldMapping]
    // Create a new FieldMapping instance for each field
    var fieldMapping: FieldMapping = null
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("Company"))
    fieldMapping.setIndex(Option(0))
    fieldMappings.addOne(fieldMapping)
    .
    .
    // Set the field mappings for the resource instance
    resourceIns.setFieldMappings(fieldMappings)
    resource.addOne(resourceIns)
    requestWrapper.setResource(resource)

    resourceIns = new Resource
    resourceIns.setType(new Choice[String]("data"))
    module = new MinifiedModule()
    module.setAPIName(Option("LeadsXProducts"))
    resourceIns.setModule(Option(module))
    resourceIns.setFileId(Option("4876876000006899001"))
    resourceIns.setIgnoreEmpty(Option(true))
    filenames = new ArrayBuffer[String]
    filenames.addOne("LeadsXProducts.csv")
    resourceIns.setFileNames(filenames)
    fieldMappings = new ArrayBuffer[FieldMapping]
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("Products"))
    fieldMapping.setFindBy(Option("id"))
    fieldMapping.setIndex(Option(0))
    fieldMappings.addOne(fieldMapping)
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("Leads")) // Specify the API name of the lookup field in the Linking Module
    fieldMapping.setParentColumnIndex(Option(5)) // Specify the index of the identifier column in the parent CSV file
    fieldMapping.setIndex(Option(1)) // Specify the index of the identifier column in the child CSV file
    fieldMappings.addOne(fieldMapping)
    resourceIns.setFieldMappings(fieldMappings)
    resource.addOne(resourceIns)
    requestWrapper.setResource(resource)

2. Multi-User Lookup Fields

In case of multi-user lookup fields, the parent module remains the module where the multi-user field is added. The child module is the lookup module created to facilitate this relationship.

For instance, let's consider a scenario where a multi-user field labeled Referred By is added in the Leads module, linking to the Users module.

Parent module : Leads

Child module : The linking module, LeadsXUsers.

To get more information about the child module, please utilize the Get Modules API. You can get the details of the fields within the child module using the Fields API.

Here is a sample CSV for adding multi-user field records along with the parent records:

LeadsXUsers.csv

Please ensure that you create a zip file containing the corresponding CSV files, upload it to the platform and then initiate the bulk write job using the file ID. The values for index and parent_column_index will vary based on your specific CSV files.
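Packing the parent and child CSV files into one archive can be done with the JDK's built-in zip classes. The sketch below builds the archive in memory and uses only `java.util.zip`; the object and method names are illustrative, and the upload step itself is not shown.

```scala
import java.io.ByteArrayOutputStream
import java.nio.charset.StandardCharsets
import java.util.zip.{ZipEntry, ZipOutputStream}

object CsvZipper {
  // Packs (file name -> CSV text) pairs into a single in-memory zip archive.
  def zip(files: Seq[(String, String)]): Array[Byte] = {
    val bytes = new ByteArrayOutputStream()
    val out = new ZipOutputStream(bytes)
    try {
      files.foreach { case (name, content) =>
        out.putNextEntry(new ZipEntry(name)) // entry name as it will appear inside the zip
        out.write(content.getBytes(StandardCharsets.UTF_8))
        out.closeEntry()
      }
    } finally out.close() // closing also writes the zip's central directory
    bytes.toByteArray
  }
}
```

The entry names used here are the names you would then list under file_names in the input JSON, so keeping them in one place helps avoid mismatches.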

To create a bulk write job using the Create Bulk Write Job API, add the following snippet to your resource array.

    {
      "type": "data",
      "module": {
        "api_name": "Leads_X_Users" // child module
      },
      "file_id": "4876876000006887001",
      "file_names": [
        "LeadsXUsers.csv" //child records CSV file name
      ],
      "field_mappings": [
        {
          "api_name": "Referred_User",
          "find_by": "id",
          "index": 0
        },
        {
          "api_name": "userlookup221_11", //API name of the Leads lookup field in LeadsXUsers module
          "parent_column_index": 5, // the index of the identifier column in the parent CSV file
          "index": 1 // the index of the identifier column in the child CSV file
        }
      ]
    }

To do the same using Scala SDK, add the following code snippet to your code:

    resourceIns = new Resource
    resourceIns.setType(new Choice[String]("data"))
    module = new MinifiedModule()
    module.setAPIName(Option("Leads_X_Users"))
    resourceIns.setModule(Option(module))
    resourceIns.setFileId(Option("4876876000006904001"))
    resourceIns.setIgnoreEmpty(Option(true))
    filenames = new ArrayBuffer[String]
    filenames.addOne("LeadsXUsers.csv")
    resourceIns.setFileNames(filenames)
    fieldMappings = new ArrayBuffer[FieldMapping]
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("Referred_User"))
    fieldMapping.setFindBy(Option("id"))
    fieldMapping.setIndex(Option(0))
    fieldMappings.addOne(fieldMapping)
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("userlookup221_11"))
    fieldMapping.setParentColumnIndex(Option(5))
    fieldMapping.setIndex(Option(1))
    fieldMappings.addOne(fieldMapping)
    resourceIns.setFieldMappings(fieldMappings)
    resource.addOne(resourceIns)
    requestWrapper.setResource(resource)

3. Subform data

To import subform data along with parent records in a single operation, you must include both the parent and subform CSV files within a zip file and upload it. In this context, the parent module refers to the module where the subform is added, and the child module is the subform module.

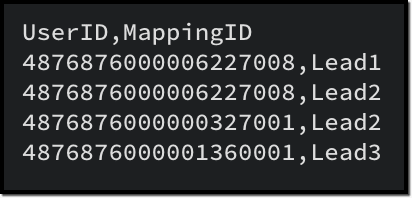

For instance, consider a subform named Alternate Address in the Leads module, with fields such as City and State.

Parent module : Leads

Child module : Alternate_Address (API name of the subform module).

In the subform CSV file (Alternate_Address.csv), in addition to the data columns, include a column to denote the linkage to the parent record.

Once the zip file containing both the parent and subform CSV files is prepared, upload it to start the import process. When you create the bulk write job, be sure to specify the values for index and parent_column_index that match your specific CSV files.
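Since index and parent_column_index are plain zero-based column positions, one way to avoid counting columns by hand is to derive them from the header rows. This is a small sketch in plain Scala, assuming simple comma-separated headers without quoted fields; the helper name is illustrative.

```scala
object ColumnIndexes {
  // Zero-based position of a column in a simple comma-separated header row,
  // or None if the column is not present.
  def indexOf(headerLine: String, column: String): Option[Int] = {
    val i = headerLine.split(",", -1).map(_.trim).indexOf(column)
    if (i >= 0) Some(i) else None
  }
}
```

For a parent header of `Company,First_Name,Last_Name,Email,Phone,MappingID` and a subform header of `State,City,MappingID`, looking up `MappingID` yields 5 for parent_column_index and 2 for index.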

Here is a sample CSV for the subform data, corresponding to the parent CSV provided earlier.

Alternate_Address.csv

To create a bulk write job using the Create Bulk Write Job API to import the subform data, add the following snippet to your resource array.

    {
      "type": "data",
      "module": {
        "api_name": "Alternate_Address" //Subform module API name
      },
      "file_id": "4876876000006915001",
      "file_names": [
        "Alternate_Address.csv" //child (subform) records CSV
      ],
      "field_mappings": [
        {
          "api_name": "State",
          "index": 0
        },
        {
          "api_name": "City",
          "index": 1
        },
        {
          "api_name": "Parent_Id", //Leads lookup field in the subform module
          "parent_column_index": 5,
          "index": 2
        }
      ]
    }

To do the same using Scala SDK, add the following code snippet to your code:

    resourceIns = new Resource
    resourceIns.setType(new Choice[String]("data"))
    module = new MinifiedModule()
    module.setAPIName(Option("Alternate_Address"))
    resourceIns.setModule(Option(module))
    resourceIns.setFileId(Option("4876876000006920001"))
    resourceIns.setIgnoreEmpty(Option(true))
    filenames = new ArrayBuffer[String]
    filenames.addOne("Alternate_Address.csv")
    resourceIns.setFileNames(filenames)
    fieldMappings = new ArrayBuffer[FieldMapping]
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("State"))
    fieldMapping.setIndex(Option(0))
    fieldMappings.addOne(fieldMapping)
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("City"))
    fieldMapping.setIndex(Option(1))
    fieldMappings.addOne(fieldMapping)
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("Parent_Id"))
    fieldMapping.setParentColumnIndex(Option(5))
    fieldMapping.setIndex(Option(2))
    fieldMappings.addOne(fieldMapping)
    resourceIns.setFieldMappings(fieldMappings)
    resource.addOne(resourceIns)
    requestWrapper.setResource(resource)

4. Line Items

To import line items along with the parent records, an approach similar to handling subform data is used. The parent module is the module housing the parent records, while the child module corresponds to the line item field.

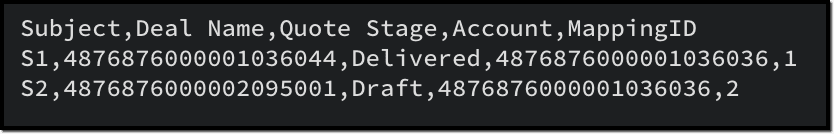

For instance, in the Quotes module, to import product details within the record, the child module should be Quoted_Items.

Here is a sample CSV for importing the parent records to the Quotes module:

Quotes.csv

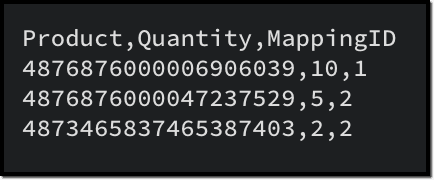

Given below is a sample CSV to add the product details in Quoted Items:

Quoted_Items.csv

Now to create a bulk write job for these records, here is a sample input JSON:

    {
      "operation": "insert",
      "ignore_empty": true,
      "callback": {
        "url": "http://www.zoho.com",
        "method": "post"
      },
      "resource": [
        {
          "type": "data",
          "module": {
            "api_name": "Quotes"
          },
          "file_id": "4876876000006949001",
          "file_names": [
            "Quotes.csv"
          ],
          "field_mappings": [
            {
              "api_name": "Subject",
              "index": 0
            },
            {
              "api_name": "Deal_Name",
              "find_by": "id",
              "index": 1
            },
            {
              "api_name": "Quote_Stage",
              "index": 2
            },
            {
              "api_name": "Account_Name",
              "find_by": "id",
              "index": 3
            }
          ]
        },
        {
          "type": "data",
          "module": {
            "api_name": "Quoted_Items"
          },
          "file_id": "4876876000006949001",
          "file_names": [
            "Quoted_Items.csv"
          ],
          "field_mappings": [
            {
              "api_name": "Product_Name",
              "find_by": "id",
              "index": 0
            },
            {
              "api_name": "Quantity",
              "index": 1
            },
            {
              "api_name": "Parent_Id",
              "parent_column_index": 4,
              "index": 2
            }
          ]
        }
      ]
    }

To do the same using Scala SDK, add this code snippet to your file:

    val bulkWriteOperations = new BulkWriteOperations
    val requestWrapper = new RequestWrapper
    val callback = new CallBack
    callback.setUrl(Option("https://www.example.com/callback"))
    callback.setMethod(new Choice[String]("post"))
    requestWrapper.setCallback(Option(callback))
    requestWrapper.setCharacterEncoding(Option("UTF-8"))
    requestWrapper.setOperation(new Choice[String]("insert"))
    requestWrapper.setIgnoreEmpty(Option(true))
    val resource = new ArrayBuffer[Resource]

    var resourceIns = new Resource
    resourceIns.setType(new Choice[String]("data"))
    var module = new MinifiedModule()
    module.setAPIName(Option("Quotes"))
    resourceIns.setModule(Option(module))
    resourceIns.setFileId(Option("4876876000006953001"))
    resourceIns.setIgnoreEmpty(Option(true))
    var filenames = new ArrayBuffer[String]
    filenames.addOne("Quotes.csv")
    resourceIns.setFileNames(filenames)
    var fieldMappings: ArrayBuffer[FieldMapping] = new ArrayBuffer[FieldMapping]
    var fieldMapping: FieldMapping = null
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("Subject"))
    fieldMapping.setIndex(Option(0))
    fieldMappings.addOne(fieldMapping)
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("Deal_Name"))
    fieldMapping.setFindBy(Option("id"))
    fieldMapping.setIndex(Option(1))
    fieldMappings.addOne(fieldMapping)
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("Quote_Stage"))
    fieldMapping.setIndex(Option(2))
    fieldMappings.addOne(fieldMapping)
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("Account_Name"))
    fieldMapping.setIndex(Option(3))
    fieldMapping.setFindBy(Option("id"))
    fieldMappings.addOne(fieldMapping)
    resourceIns.setFieldMappings(fieldMappings)
    resource.addOne(resourceIns)
    requestWrapper.setResource(resource)

    resourceIns = new Resource
    resourceIns.setType(new Choice[String]("data"))
    module = new MinifiedModule()
    module.setAPIName(Option("Quoted_Items"))
    resourceIns.setModule(Option(module))
    resourceIns.setFileId(Option("4876876000006953001"))
    resourceIns.setIgnoreEmpty(Option(true))
    filenames = new ArrayBuffer[String]
    filenames.addOne("Quoted_Items.csv")
    resourceIns.setFileNames(filenames)
    fieldMappings = new ArrayBuffer[FieldMapping]
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("Product_Name"))
    fieldMapping.setFindBy(Option("id"))
    fieldMapping.setIndex(Option(0))
    fieldMappings.addOne(fieldMapping)
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("Quantity"))
    fieldMapping.setIndex(Option(1))
    fieldMappings.addOne(fieldMapping)
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("Parent_Id"))
    fieldMapping.setParentColumnIndex(Option(4))
    fieldMapping.setIndex(Option(2))
    fieldMappings.addOne(fieldMapping)
    resourceIns.setFieldMappings(fieldMappings)
    resource.addOne(resourceIns)
    requestWrapper.setResource(resource)

Notes :

- When importing a single CSV file (parent or child module records separately), field_mappings is an optional key in the resource array. If you skip this key, the field mappings must be defined using the column names in the CSV file. In such cases, the column names should correspond to the field API names. Additionally, all columns should be mapped with the correct API names, and there should not be any extra unmapped columns.

- When importing parent and child records in a single API call, field_mappings is a mandatory key.

- The identifier column in the parent and child CSV can have different column names, as the mapping is done based on the input JSON.
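For the single-file case described in the first note, where field_mappings is skipped and the column names must be field API names, a header check along these lines may help catch stray columns early. This is an illustrative sketch: the set of field API names is supplied by the caller (for example, gathered from the Fields API), and simple comma-separated headers are assumed.

```scala
object HeaderCheck {
  // Returns the header columns that are not in the given set of field API names.
  // An empty result means every column maps to a known field.
  def unknownColumns(headerLine: String, fieldApiNames: Set[String]): Seq[String] =
    headerLine.split(",", -1).map(_.trim).filterNot(fieldApiNames.contains).toSeq
}
```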

Points to remember

- An uploaded file can be used for a single bulk write job only. If you want to retry the operation with the same data, upload the file again to generate a new file ID.

- When adding parent and child records in a single operation, ensure that the parent module comes first, followed by the child module details in the resource array of the input body.

- The parent and all child CSV files should be zipped into a single file and uploaded. You cannot use more than one zip file in a single bulk write job.

- Define appropriate mappings for both parent and child records using the parent_column_index and index keys to establish the relationship.

- Use the resource > file_names key to map each CSV file to the appropriate module.

- For each parent in the parent records file:

- By default, the limit for Subforms and Line Items is set to 200. While you can configure this limit for subforms in the UI, customization options are not available for Line Items.

- MultiSelect Lookup fields have a maximum limit of 100. If you have more than 100 associations for a MultiSelect Lookup field, you may schedule additional bulk write jobs for the child records alone, importing 100 records at a time.

- The maximum limit for Multi-User Lookup fields is restricted to 10.
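The per-parent limits above can be checked against a child CSV before upload by counting how many child rows reference each parent identifier. The sketch below is plain Scala with illustrative names; the constants are the default limits quoted above, and the input is simply the identifier column of the child file.

```scala
object ChildLimitCheck {
  // Default per-parent limits quoted in the points above.
  val SubformOrLineItemLimit = 200
  val MultiSelectLookupLimit = 100
  val MultiUserLookupLimit = 10

  // Counts child rows per parent identifier and returns only the parents above the limit.
  def overLimit(childParentIds: Seq[String], limit: Int): Map[String, Int] =
    childParentIds
      .groupBy(identity)
      .map { case (id, rows) => id -> rows.size }
      .filter { case (_, count) => count > limit }
}
```

Any parent reported here for a multiselect lookup field would be a candidate for the follow-up jobs mentioned above, importing the remaining child records separately.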

We hope that you found this post useful, and you have gained some insights into using the Bulk Write API effectively. If you have any queries, let us know in the comments below, or feel free to send an email to support@zohocrm.com. We would love to hear from you!

Recommended Reads :

- Check out our Kaizen Collection here

- Previous Kaizen : Zoho CRM Scala SDK (V6) - Configuration and Initialization

- Create a Bulk Write Job

- Kaizen #103 - Bulk Write API using PHP SDK (v5) - Part I

- Kaizen #104 - Bulk Write API using PHP SDK (v5) - Part II
