Kaizen #123 Data Synchronization from a third party application

Welcome back to the Kaizen series!

In last week's post, we discussed one-way data synchronization from Zoho CRM to a legacy application using Zoho CRM's Bulk Read API and Notification API. This week, we will discuss data synchronization in the other direction: from a third-party application to Zoho CRM.

The APIs available in Zoho CRM to insert, update, or upsert records are:

1) Insert, Update, and Upsert Records APIs

These are synchronous APIs that create, update, or upsert up to 100 records per call in a Zoho CRM module. The Insert Records API creates records and the Update Records API updates them, while the Upsert Records API inserts or updates a record depending on whether it already exists in your org. For an upsert, the system checks for duplicate records based on the fields listed in the duplicate_check_fields JSON array of the input JSON. If a matching record is already present, it is updated. If not, the record is inserted. You get the status of these operations immediately. Read more about the Upsert Records API in this Kaizen post.
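As a rough illustration of the 100-record limit and the duplicate_check_fields array, the sketch below splits a list of records into request bodies for the Upsert Records API. The field name `External_Id` and the helper names are hypothetical, and the actual HTTP call (endpoint, OAuth header) is left out on purpose; check the Upsert Records API documentation before relying on the body shape.

```python
from typing import Iterator

MAX_RECORDS_PER_CALL = 100  # per-call limit of the synchronous record APIs


def chunk(records: list[dict], size: int = MAX_RECORDS_PER_CALL) -> Iterator[list[dict]]:
    """Yield consecutive slices of at most `size` records."""
    for start in range(0, len(records), size):
        yield records[start:start + size]


def build_upsert_body(records: list[dict], duplicate_check_fields: list[str]) -> dict:
    """Build the JSON body for a single upsert call (hypothetical helper)."""
    if len(records) > MAX_RECORDS_PER_CALL:
        raise ValueError("at most 100 records per call")
    return {
        "data": records,
        # Fields the system compares to decide between insert and update.
        "duplicate_check_fields": duplicate_check_fields,
    }


# "External_Id" is an assumed unique field, used only for illustration.
orders = [{"External_Id": f"ORD-{i}", "Name": f"Order {i}"} for i in range(250)]
bodies = [build_upsert_body(batch, ["External_Id"]) for batch in chunk(orders)]
print(len(bodies), [len(body["data"]) for body in bodies])
```

Each body in `bodies` would be sent as one API call, so 250 records need three calls.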

2) Bulk Write API

The Bulk Write API is an asynchronous API that performs create, update, or upsert operations for up to 25,000 records in a Zoho CRM module. Each request schedules a background job in Zoho CRM. You can check the status of the scheduled job using the Bulk Write Job Details API. Alternatively, you can configure a callback URL when you schedule the job. The details of the bulk write job result are posted to the callback URL when the job completes or fails. Using the Bulk Write API involves the following steps:

- Preparing the CSV input file

- Uploading the CSV file

- Creating a bulk write job

- Checking the job's status

- Downloading the result

To learn more about these steps in the Bulk Write API and about the Bulk Write API in general, refer to the Kaizen post on the Bulk Write API.
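The first step, preparing the CSV input file, could be sketched as below. This assumes the upload step expects the CSV wrapped in a ZIP archive, which should be verified against the Bulk Write API documentation; the column names and the helper `build_csv_zip` are hypothetical.

```python
import csv
import io
import zipfile


def build_csv_zip(rows: list[dict], columns: list[str], csv_name: str = "orders.csv") -> bytes:
    """Serialize rows to CSV (header row first) and wrap the file in an in-memory ZIP."""
    text = io.StringIO()
    # extrasaction="ignore" drops keys that are not listed in `columns`.
    writer = csv.DictWriter(text, fieldnames=columns, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(rows)

    archive = io.BytesIO()
    with zipfile.ZipFile(archive, "w", zipfile.ZIP_DEFLATED) as zf:
        zf.writestr(csv_name, text.getvalue())
    return archive.getvalue()


# Hypothetical legacy rows; "internal_note" is not exported.
rows = [
    {"parent_id": "A1", "Name": "First order", "internal_note": "skip me"},
    {"parent_id": "A2", "Name": "Second order", "internal_note": "skip me"},
]
payload = build_csv_zip(rows, ["parent_id", "Name"])
print(len(payload) > 0)
```

The returned bytes are what you would hand to the upload step; the column order fixes the indexes used later when mapping CSV columns to CRM fields.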

| Insert, Update, Upsert Records | Bulk Write API |
| --- | --- |
| Insert, update, or upsert up to 100 records per API call. | Insert, update, or upsert up to 25,000 records per API call. |
| The response is available instantly. | The response to the Bulk Write API request will not be available immediately. You can check the status of the job by polling for it, or you can wait for the status to be posted to the callback URL after the job has completed. |
| These APIs consume one credit per 10 records. | The Bulk Write API consumes 500 credits per API call. |
| The input should be in JSON format. | The input file should be in CSV format. |

Let us now examine four scenarios for inserting records into a module, with 100, 2,000, 5,000, and 25,000 records, and compare the two approaches in terms of the number of API calls and the credits consumed.

| Number of Records | Insert, Update, or Upsert Records API | Bulk Write API |
| --- | --- | --- |
| 100 | 1 API call, 10 API credits | 1 API call, 500 API credits |
| 2,000 | 20 API calls, 200 API credits | 1 API call, 500 API credits |
| 5,000 | 50 API calls, 500 API credits | 1 API call, 500 API credits |
| 25,000 | 250 API calls, 2,500 API credits | 1 API call, 500 API credits |

At 5,000 records, both approaches cost the same 500 credits. So if you have more than 5,000 records, it is advisable to use the Bulk Write API, provided you are fine with the operation running asynchronously. If you want instant results, use the Insert, Update, or Upsert Records APIs instead, keeping in mind that they will consume more API credits at this volume.
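The arithmetic behind this comparison can be reproduced with a few lines of Python. This is only a sketch of the credit rules quoted above (one credit per 10 records, 100 records per call, 500 credits per bulk write call of up to 25,000 records); rounding partial groups up is an assumption.

```python
def ceil_div(a: int, b: int) -> int:
    """Integer division that rounds up."""
    return -(-a // b)


def record_api_cost(record_count: int) -> tuple[int, int]:
    """Return (API calls, credits) for the Insert/Update/Upsert Records APIs."""
    calls = ceil_div(record_count, 100)   # up to 100 records per call
    credits = ceil_div(record_count, 10)  # one credit per 10 records (rounded up: assumption)
    return calls, credits


def bulk_write_cost(record_count: int) -> tuple[int, int]:
    """Return (API calls, credits) for the Bulk Write API."""
    calls = ceil_div(record_count, 25_000)  # up to 25,000 records per call
    return calls, calls * 500               # 500 credits per call


for count in (100, 2_000, 5_000, 25_000):
    print(count, record_api_cost(count), bulk_write_cost(count))
```

The two approaches break even at 5,000 records, which is where the recommendation above comes from.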

Data Sync

Consider an e-commerce company where the Order management team uses a legacy system and the Accounts team uses Zoho CRM. To ensure that the Accounts team works with near real-time data in Zoho CRM, data synchronization from the legacy application is essential. Assume that in Zoho CRM, there is a custom module named "Orders" that contains the details from the legacy system. Imagine that you are in charge of the data synchronization between Zoho CRM and the legacy system. How should you call these APIs to achieve this synchronization? The synchronization process involves two steps.

Step 1: Initial Data Sync: Initial data sync from the legacy system to Zoho CRM.

Step 2: Subsequent Data Sync: Push any new orders or updated orders to Zoho CRM.

The solution we propose here is one of the many ways in which the data sync can be achieved.

This solution is built on the following three key points:

1) Unique field in the Orders module: Maintain a unique field "parent_id" in the "Orders" module, and map the unique identifier of each order in your legacy system to this parent_id field. In the Create Bulk Write Job API, you set up this mapping in the field_mappings section. For upsert operations to work correctly, use the "find_by" field inside the resource JSON array of the Create Bulk Write Job API request, and set its value to the unique field "parent_id". To read more about the find_by field, refer to the Create Bulk Write Job page.

2) Sync flag in the orders table of the legacy system: Maintain a flag in your legacy system's orders table that indicates whether the record is synced with Zoho CRM. Once a record is synced, set the flag to true. Whenever a record is modified or its sync fails, set the flag back to false. This way, a record that gets updated after it has already been synced during the initial sync process is picked up and synced again.

3) Grouping data into batches: For the initial sync, the data volume in the table will be huge, so the records have to be grouped into batches of up to 25,000. You can form these batches by sorting the table on a particular column and filtering the records on the sync column with the criterion sync=false.
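The sync flag and the batching described in the points above can be sketched with an in-memory SQLite table standing in for the legacy orders table. The table layout, column names, and helper functions are hypothetical; the sketch also assumes positive integer order ids and sorts on that column.

```python
import sqlite3

BATCH_SIZE = 25_000  # Bulk Write API limit per job


def create_orders_table(conn: sqlite3.Connection) -> None:
    conn.execute(
        "CREATE TABLE orders ("
        " id INTEGER PRIMARY KEY,"             # legacy identifier, mapped to parent_id
        " amount REAL,"
        " synced INTEGER NOT NULL DEFAULT 0)"  # 0 = false, 1 = true
    )


def save_order(conn: sqlite3.Connection, order_id: int, amount: float) -> None:
    """Insert or modify an order; every write resets the sync flag to false."""
    conn.execute(
        "INSERT INTO orders (id, amount, synced) VALUES (?, ?, 0) "
        "ON CONFLICT(id) DO UPDATE SET amount = excluded.amount, synced = 0",
        (order_id, amount),
    )


def mark_synced(conn: sqlite3.Connection, order_ids: list[int]) -> None:
    """Set the flag to true once the records have been synced."""
    conn.executemany("UPDATE orders SET synced = 1 WHERE id = ?", [(i,) for i in order_ids])


def iter_unsynced_batches(conn: sqlite3.Connection, batch_size: int = BATCH_SIZE):
    """Yield unsynced rows sorted by id, at most batch_size rows per batch."""
    last_id = 0  # assumes ids are positive integers
    while True:
        rows = conn.execute(
            "SELECT id, amount FROM orders WHERE synced = 0 AND id > ? ORDER BY id LIMIT ?",
            (last_id, batch_size),
        ).fetchall()
        if not rows:
            return
        yield rows
        last_id = rows[-1][0]


conn = sqlite3.connect(":memory:")
create_orders_table(conn)
for order_id in range(1, 8):
    save_order(conn, order_id, 10.0 * order_id)
batches = list(iter_unsynced_batches(conn, batch_size=3))
print([len(batch) for batch in batches])
```

Paging on `id > last_id` keeps batches from overlapping even before any row has been marked as synced. A production version would also need to avoid marking a row as synced if it was modified after being read.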

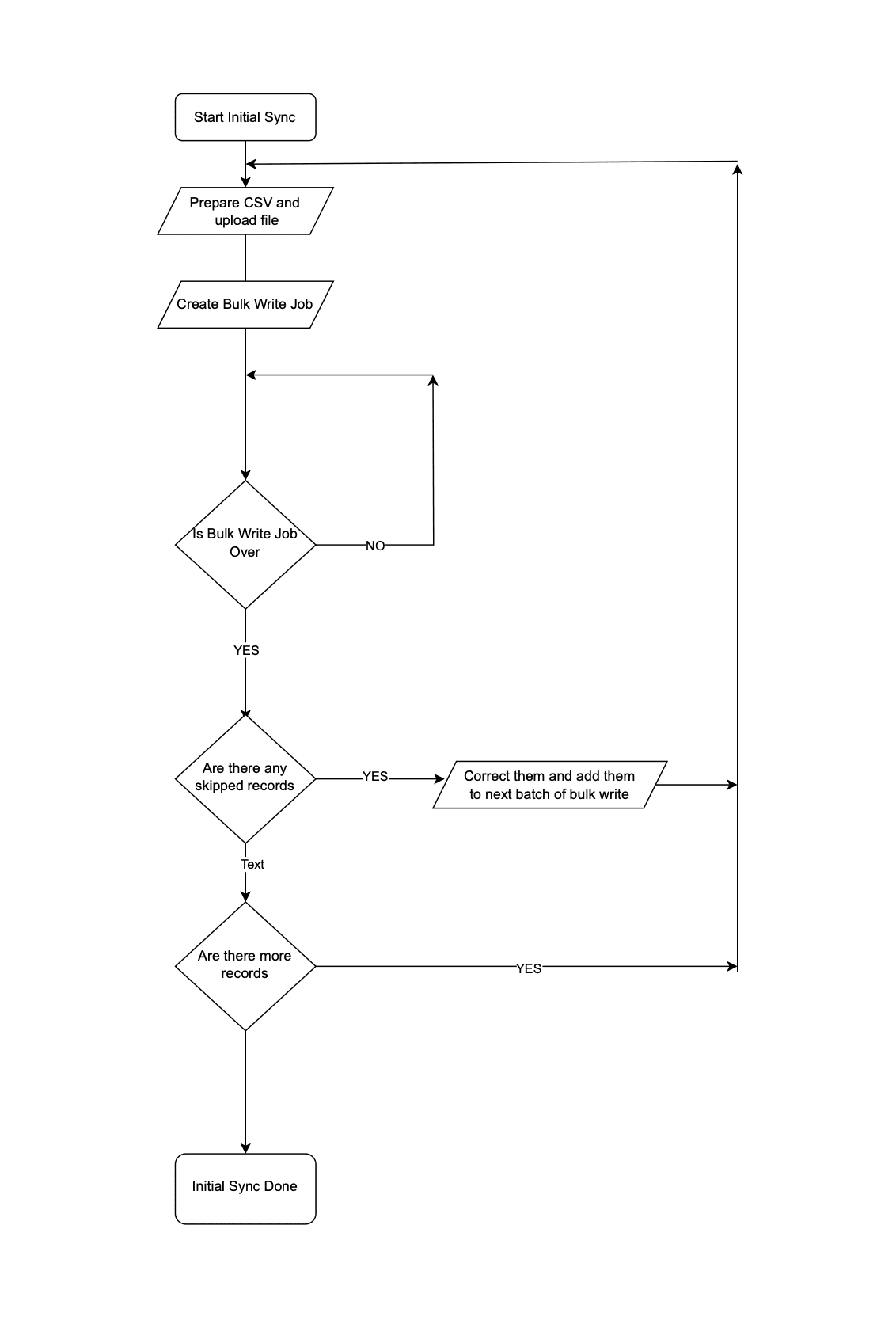

Step 1: Initial Data Sync

Since you have multiple batches of 25k records, you have to iterate them one by one and send them to the Bulk Write API.
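For each batch, the Create Bulk Write Job request could be assembled along the lines below. Only resource, find_by, and field_mappings come from this post; the other keys ("operation", "type", "module", "file_id", "callback") are written from memory of the Create Bulk Write Job documentation and their exact shape may differ between API versions, so treat them as assumptions. The helper name and the placeholder file id are hypothetical.

```python
from typing import Optional


def build_bulk_upsert_job(
    file_id: str,
    module_api_name: str,
    csv_columns: list[str],
    unique_field: str = "parent_id",
    callback_url: Optional[str] = None,
) -> dict:
    """Build a request body for an upsert job (a sketch, not the definitive schema)."""
    if unique_field not in csv_columns:
        raise ValueError(f"{unique_field!r} must be one of the CSV columns")
    resource = {
        "type": "data",                            # assumed key
        "module": {"api_name": module_api_name},   # assumed shape, may vary by version
        "file_id": file_id,                        # id returned by the upload step
        "find_by": unique_field,                   # field used to match existing records
        # Map each CSV column position to the field with the same API name.
        "field_mappings": [
            {"api_name": column, "index": index}
            for index, column in enumerate(csv_columns)
        ],
    }
    body = {"operation": "upsert", "resource": [resource]}
    if callback_url is not None:
        body["callback"] = {"url": callback_url, "method": "post"}  # assumed shape
    return body


job = build_bulk_upsert_job("FILE_ID_PLACEHOLDER", "Orders", ["parent_id", "Name", "Amount"])
print(job["resource"][0]["find_by"])
```

One such body would be posted per batch, after that batch's file has been uploaded.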

Step 2: Subsequent Data Sync

The Order Management team continues to use the legacy application, leading to continuous creation or update of records in the Orders module.

Invoking the Upsert Records API every time an operation happens on an order in the legacy system is not recommended. Subsequent data syncs can be done in two ways: (a) near real-time sync or (b) interval-based sync.

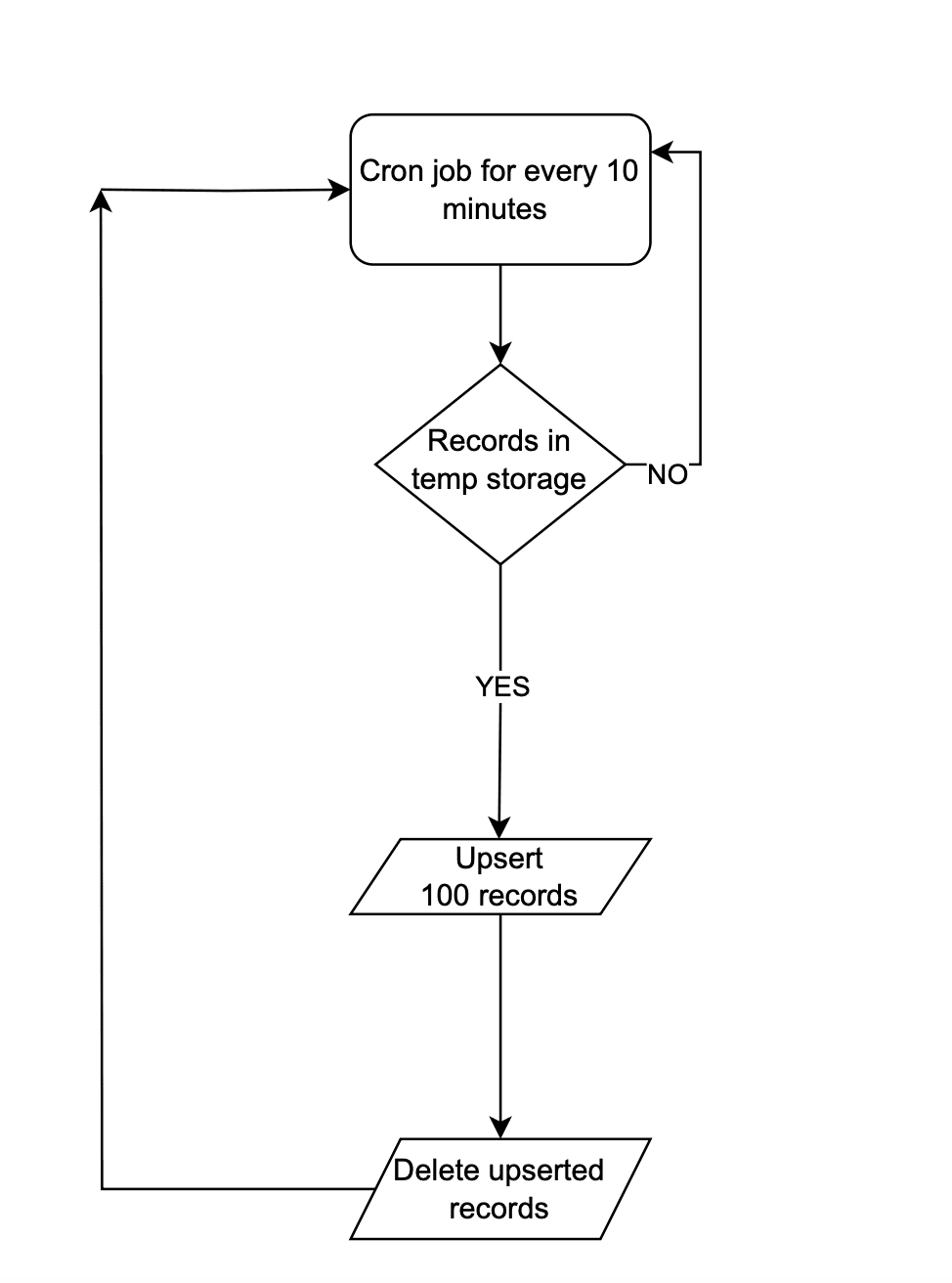

(a) Near Real-time Sync

Since the goal is near real-time data in Zoho CRM, it is acceptable for records updated in the legacy system since the last synchronization to be unavailable in Zoho CRM for up to 10 minutes.

Instead of making an API call to Zoho CRM on every data operation, store the data in a temporary data store such as a DB table or Redis. Once 100 records have accumulated in that data store, invoke the Upsert Records API with all of these records and then empty the data store. This approach leads to lower consumption of API credits.

It is possible that fewer than 100 record operations happen within a 10-minute period. To cover this case, create scheduler or cron jobs that push whatever records are in the data store to Zoho CRM. Once these records are handled, the data store should be emptied.
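A minimal sketch of this buffering logic is shown below, with an in-process dictionary standing in for the temporary data store. The class `UpsertBuffer`, its method names, and the `flush` callable (which would wrap the Upsert Records API call) are hypothetical. Keying the buffer by the legacy id, so that repeated edits to the same order collapse into one record, is a design choice of this sketch and not something the approach above requires.

```python
import time
from typing import Callable


class UpsertBuffer:
    """Collect changed records and flush at 100 records or after 10 minutes."""

    def __init__(
        self,
        flush: Callable[[list[dict]], None],
        max_records: int = 100,
        max_age_seconds: float = 600.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._flush = flush
        self._max_records = max_records
        self._max_age = max_age_seconds
        self._clock = clock
        self._records: dict = {}   # legacy id -> latest version of the record
        self._oldest = None        # time the first buffered record arrived

    def add(self, key, record: dict) -> None:
        if not self._records:
            self._oldest = self._clock()
        self._records[key] = record
        if len(self._records) >= self._max_records:
            self.flush()

    def tick(self) -> None:
        """Call this from a scheduler or cron job to flush stale records."""
        if self._records and self._clock() - self._oldest >= self._max_age:
            self.flush()

    def flush(self) -> None:
        if not self._records:
            return
        self._flush(list(self._records.values()))
        # Reached only if the flush call did not raise, so failed batches are kept.
        self._records.clear()
        self._oldest = None


sent_batches: list[list[dict]] = []
buffer = UpsertBuffer(sent_batches.append)
for i in range(100):
    buffer.add(f"ORD-{i}", {"parent_id": f"ORD-{i}"})
print(len(sent_batches), len(sent_batches[0]))
```

In a real deployment the buffer would live in the DB table or Redis mentioned above, so that pending records survive a restart.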

(b) Interval-based synchronization

For interval-based synchronization, schedule Bulk Write API calls at suitable intervals to upsert records into the Orders module in Zoho CRM. Whenever there is a record operation in the legacy table, update the sync flag to "false". At regular intervals, such as every 12 or 24 hours, use the Bulk Write API or the Upsert Records API, depending on the volume of the data changes.
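One possible way to make the volume-based choice at each interval is to compare the credit cost of the two APIs for the number of pending records. The function below is a sketch under the credit rules quoted earlier in this post; its name and return values are hypothetical, and on a tie it prefers the Upsert Records API because its result is available immediately.

```python
BULK_WRITE_CREDITS_PER_CALL = 500
BULK_WRITE_MAX_RECORDS = 25_000


def choose_sync_api(pending_records: int) -> str:
    """Pick the cheaper API, in credits, for the records with sync flag "false"."""
    if pending_records <= 0:
        return "none"
    # One credit per 10 records for the Upsert Records API (rounded up: assumption).
    upsert_credits = -(-pending_records // 10)
    # 500 credits per bulk write call of up to 25,000 records.
    bulk_calls = -(-pending_records // BULK_WRITE_MAX_RECORDS)
    bulk_credits = bulk_calls * BULK_WRITE_CREDITS_PER_CALL
    return "upsert_records" if upsert_credits <= bulk_credits else "bulk_write"


for pending in (0, 800, 5_000, 5_001, 60_000):
    print(pending, choose_sync_api(pending))
```

Credits are only one criterion; if the Accounts team needs the result of the sync immediately, the Upsert Records API may still be preferable at higher volumes.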

We hope you found this article useful. We will be back next week with another interesting topic. If you have any questions, write to us at support@zohocrm.com or let us know in the comment section.

Previous Kaizen Post: Kaizen #122 - Data Synchronization using Bulk Read and Notification APIs
