Kaizen #131 - Bulk Write for parent-child records using Scala SDK

Hello and welcome back to this week's Kaizen!

Last week, we discussed how to configure and initialize the Zoho CRM Scala SDK. This week, we will explore the Bulk Write API and its capabilities. Specifically, we will focus on importing parent-child records in a single bulk write operation, and on how to do this using the Scala SDK.

Quick Recap of Bulk Write API

The Bulk Write API lets you insert, update, or upsert large datasets in your CRM account efficiently. It operates asynchronously, scheduling jobs to handle the data operations. When a job completes, a notification is sent to the specified callback URL; alternatively, you can check the job status periodically.
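If you prefer checking the status periodically over using a callback, the loop can be sketched roughly as below. This is only an illustration: `fetchStatus` is a hypothetical stand-in for your own call to the job details API, and the set of terminal states contains only COMPLETED because that is the one named in this post; extend it if your jobs report others.

```scala
import scala.annotation.tailrec

object BulkWritePolling {
  // Terminal states known from this post; extend the set if your jobs report others.
  val Terminal: Set[String] = Set("COMPLETED")

  /** Polls `fetchStatus` until a terminal state is seen or `maxAttempts` is used up.
    * `fetchStatus` is a hypothetical stand-in for a call to the job details API.
    * Returns Some(status) on completion, None if the attempts ran out.
    */
  def waitForJob(fetchStatus: () => String, maxAttempts: Int, delayMillis: Long): Option[String] = {
    @tailrec
    def loop(attempt: Int): Option[String] =
      if (attempt > maxAttempts) None
      else {
        val status = fetchStatus()
        if (Terminal.contains(status)) Some(status)
        else {
          Thread.sleep(delayMillis)
          loop(attempt + 1)
        }
      }
    loop(1)
  }

  def main(args: Array[String]): Unit = {
    // Simulated status sequence standing in for real API responses.
    val responses = Iterator("ADDED", "INPROGRESS", "INPROGRESS", "COMPLETED")
    println(waitForJob(() => responses.next(), maxAttempts = 10, delayMillis = 10L))
  }
}
```

Capping the number of attempts keeps a stuck job from blocking the caller forever; in practice you would pick a delay that respects your API limits.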

When to use Bulk Write API?

- When scheduling a job to import a massive volume of data.

- When needing to process more than 100 records in a single API call.

- When conducting background processes like migration or initial data sync between Zoho CRM and external services.

Steps to Use Bulk Write API:

- Prepare CSV File: Create a CSV file with field API names as the first row and data in subsequent rows.

- Upload Zip File: Compress the CSV file into a zip format and upload it via a POST request.

- Create Bulk Write Job: Use the uploaded file ID, callback URL, and field API names to create a bulk write job for insertion, update, or upsert operations.

- Check Job Status: Monitor job status through polling or callback methods. Status could be ADDED, INPROGRESS, or COMPLETED.

- Download Result: Retrieve the result of the bulk write job, typically a CSV file with job details, using the provided download URL.
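The "prepare CSV" and "zip" steps above need nothing beyond the Java standard library. Here is a minimal sketch, assuming the CSV content is already available as strings; the object and method names (`CsvZip`, `zip`, `entryNames`) are our own and not part of the SDK.

```scala
import java.io.{ByteArrayInputStream, ByteArrayOutputStream}
import java.nio.charset.StandardCharsets
import java.util.zip.{ZipEntry, ZipInputStream, ZipOutputStream}

object CsvZip {
  /** Packs the given (file name -> CSV text) pairs into one in-memory zip archive. */
  def zip(files: Seq[(String, String)]): Array[Byte] = {
    val buffer = new ByteArrayOutputStream()
    val out = new ZipOutputStream(buffer)
    try {
      files.foreach { case (name, csv) =>
        out.putNextEntry(new ZipEntry(name))
        out.write(csv.getBytes(StandardCharsets.UTF_8))
        out.closeEntry()
      }
    } finally out.close() // closing also writes the zip's central directory
    buffer.toByteArray
  }

  /** Lists the entry names in an archive, as a sanity check before uploading. */
  def entryNames(archive: Array[Byte]): List[String] = {
    val in = new ZipInputStream(new ByteArrayInputStream(archive))
    try Iterator.continually(in.getNextEntry).takeWhile(_ != null).map(_.getName).toList
    finally in.close()
  }
}
```

The resulting byte array can be written to disk or handed to whatever upload call you use; the entry names are what you would later list under file_names.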

In our previous Kaizen posts - Bulk Write API Part I and Part II, we have extensively covered the Bulk Write API, complete with examples and sample codes for the PHP SDK. We highly recommend referring to those posts before reading further to gain a better understanding of the Bulk Write API.

With the release of our V6 APIs, we have introduced a significant enhancement to the Bulk Write API. Previously, bulk write operations required separate API calls for the parent module and for each child module. With this enhancement, you can now import them all in a single operation or API call.

Field Mappings for parent-child records in a single API call

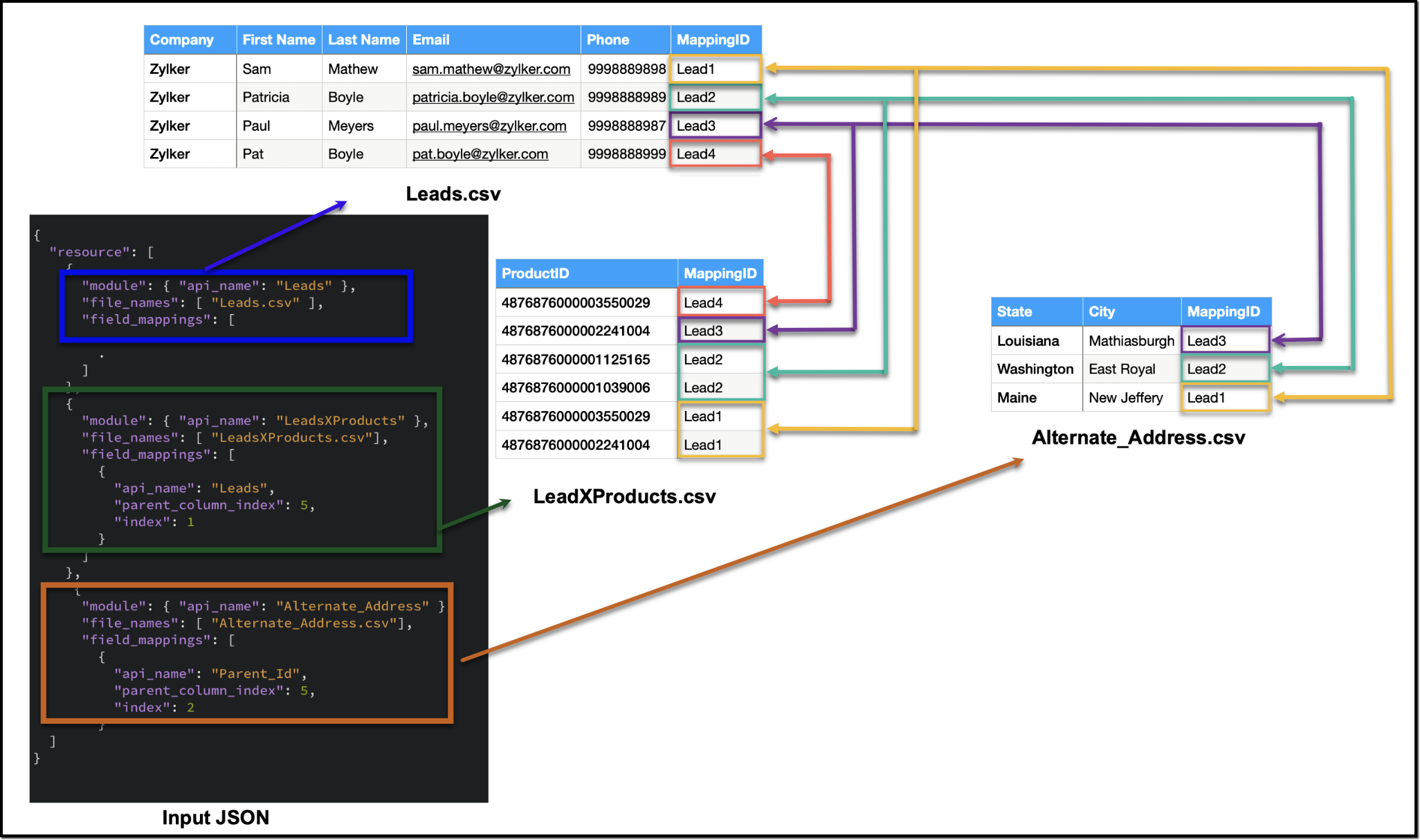

When configuring field mappings for bulk write operations involving parent-child records in a single API call, there are two key aspects to consider: creating the CSV file containing the data and constructing the input JSON for the bulk write job.

Creating the data CSV file:

To set up the data for a bulk write operation involving parent-child records, you need to prepare separate CSV files: one for the parent module records, and one for each child module's records. Appropriate field mappings must then be defined for both the parent and the child CSV files.

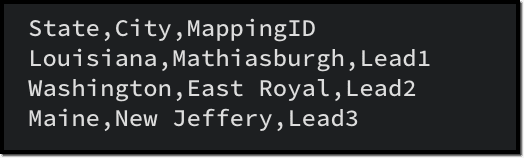

The parent CSV file will contain the parent records, while the child CSV file will contain the child records. To make sure that each child record is linked to its respective parent record, we will add an extra column (MappingID in the image below) to both the parent and child CSV files. This column will have a unique identifier value for each parent record. For each record in the child CSV file, the value in the identifier column should match the value of the identifier of the parent record in the parent CSV file. This ensures an accurate relationship between the parent and child records during the bulk write operation.
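Before zipping the files, it can be worth checking that every identifier in the child CSV really exists in the parent CSV. The sketch below does that for simple CSV text; it is an illustration with names of our own choosing, it treats the first row as a header, and it does not handle quoted fields that contain commas.

```scala
object MappingIdCheck {
  /** Splits simple CSV text into rows of trimmed cells (no support for quoted commas). */
  def rows(csv: String): List[Array[String]] =
    csv.linesIterator.map(_.trim).filter(_.nonEmpty).map(_.split(",", -1).map(_.trim)).toList

  /** Returns the child identifier values that have no matching parent identifier.
    * Both column arguments are zero-based positions; the first row of each file is a header.
    */
  def orphanedChildIds(parentCsv: String, parentIdColumn: Int,
                       childCsv: String, childIdColumn: Int): List[String] = {
    val parentIds = rows(parentCsv).drop(1).map(_(parentIdColumn)).toSet
    rows(childCsv).drop(1).map(_(childIdColumn)).filterNot(parentIds.contains).distinct
  }
}
```

An empty result means every child row points at a parent row in the same zip.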

Please be aware that the mapping of values is solely dependent on the mappings defined in the input JSON. In this case, the column names in the CSV file serve only as a reference for you. Please refer to the notes section towards the end of this document for more details.

Creating the CSV file remains consistent across all types of child records, and we have already discussed how each child record is linked to its respective parent record in the CSV file. To facilitate the same linkage in the input JSON, we have introduced a new key called parent_column_index. This key assists us in specifying which column in the child module's CSV file contains the identifier or index linking it to the parent record. In the upcoming sections, we will explore preparing the input JSON for various types of child records.

Additionally, since the zip file now contains multiple CSV files, we have introduced another new key named file_names in the resource array. The file_names key maps each CSV file to its corresponding module.

When adding parent and child records in a single operation, list the parent module details first in the resource array of the input body, followed by the child module details.
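These rules are easy to check before a job is submitted. The following sketch models the resource array with plain case classes (our own simplified types, not the SDK's Resource and FieldMapping classes) and assumes a single parent followed by one or more children, as in the examples in this post.

```scala
object ResourceOrderCheck {
  final case class Mapping(apiName: String, index: Int, parentColumnIndex: Option[Int] = None)
  final case class Resource(module: String, fileNames: List[String], mappings: List[Mapping])

  /** Returns human-readable problems; an empty list means all checks passed. */
  def problems(resources: List[Resource], zipEntries: Set[String]): List[String] = {
    // The first resource is expected to be the parent; all later ones are children.
    val orderProblems = resources.zipWithIndex.collect {
      case (r, 0) if r.mappings.exists(_.parentColumnIndex.isDefined) =>
        s"${r.module}: the first resource must be the parent, but it maps parent_column_index"
      case (r, i) if i > 0 && !r.mappings.exists(_.parentColumnIndex.isDefined) =>
        s"${r.module}: child resource has no parent_column_index mapping"
    }
    // Every name listed under file_names must exist in the uploaded zip.
    val fileProblems = for {
      r <- resources
      name <- r.fileNames if !zipEntries.contains(name)
    } yield s"${r.module}: $name is not in the uploaded zip"
    orderProblems ++ fileProblems
  }
}
```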

1. Multiselect Lookup Fields

In scenarios involving multiselect lookup fields, the Bulk Write API now allows for the import of both parent and child records in a single operation.

In the context of multiselect lookup fields, the parent module refers to the primary module where the multiselect lookup field is added. For instance, in our example, consider a multiselect lookup field in the Leads module linking to the Products module.

Parent Module : Leads

Child module : The linking module that establishes the relationship between the parent module and the related records (LeadsXProducts)

Here are the sample files for the "LeadsXProducts" case:

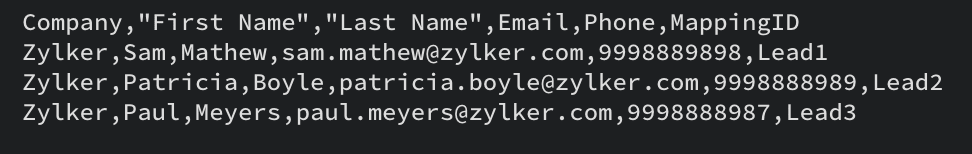

Leads.csv (Parent)

LeadsXProducts.csv (Child)

Given below is a sample input JSON for this bulk write job. Note that the position of the identifier column in the child CSV file goes under the key index, while the position of the identifier column in the parent CSV file goes under parent_column_index.

To map the child records to their corresponding parent records (linking module), you must use the field API name of the lookup field that links to the parent module. For example, in this case, the API name of the lookup field linking to the Leads module from the LeadsXProducts is Leads.

    {
      "operation": "insert",
      "ignore_empty": true,
      "callback": {
        "url": "http://www.zoho.com",
        "method": "post"
      },
      "resource": [
        {
          "type": "data",
          "module": {
            "api_name": "Leads" //parent module API name
          },
          "file_id": "4876876000006855001",
          "file_names": [
            "Leads.csv" //parent records CSV file
          ],
          "field_mappings": [ // field mappings for the parent record fields
            {
              "api_name": "Company", //field API name
              "index": 0 //index in the CSV file
            },
            { "api_name": "First_Name", "index": 1 },
            { "api_name": "Last_Name", "index": 2 },
            { "api_name": "Email", "index": 3 },
            { "api_name": "Phone", "index": 4 }
          ]
        },
        {
          "type": "data",
          "module": {
            "api_name": "LeadsXProducts" //child module API name
          },
          "file_id": "4876876000006855001",
          "file_names": [
            "LeadsXProducts.csv" //child records CSV file
          ],
          "field_mappings": [
            {
              "api_name": "Products",
              "find_by": "id",
              "index": 0
            },
            {
              "api_name": "Leads", //field API name of the lookup field in the Linking Module
              "parent_column_index": 5, // the index of the identifier column in the parent CSV file
              "index": 1 //index of the identifier column in the child CSV file
            }
          ]
        }
      ]
    }

The following is a sample code snippet for the Scala SDK, to achieve the same functionality. Find the complete code here.

    var module = new MinifiedModule() // Create a new instance of MinifiedModule
    module.setAPIName(Option("Leads")) // Set the API name for the module to "Leads"
    resourceIns.setModule(Option(module))
    resourceIns.setFileId(Option("4876876000006899001")) // Set the file ID for the resource instance
    resourceIns.setIgnoreEmpty(Option(true))
    var filenames = new ArrayBuffer[String] // Create a new ArrayBuffer to store file names
    filenames.addOne("Leads.csv")
    resourceIns.setFileNames(filenames) // Set the file names for the resource instance

    // Create a new ArrayBuffer to store field mappings
    var fieldMappings: ArrayBuffer[FieldMapping] = new ArrayBuffer[FieldMapping]
    // Create a new FieldMapping instance for each field
    var fieldMapping: FieldMapping = null
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("Company"))
    fieldMapping.setIndex(Option(0))
    fieldMappings.addOne(fieldMapping)
    // . . (the remaining parent field mappings are omitted in this snippet)

    // Set the field mappings for the resource instance
    resourceIns.setFieldMappings(fieldMappings)
    resource.addOne(resourceIns)
    requestWrapper.setResource(resource)

    resourceIns = new Resource
    resourceIns.setType(new Choice[String]("data"))
    module = new MinifiedModule()
    module.setAPIName(Option("LeadsXProducts"))
    resourceIns.setModule(Option(module))
    resourceIns.setFileId(Option("4876876000006899001"))
    resourceIns.setIgnoreEmpty(Option(true))
    filenames = new ArrayBuffer[String]
    filenames.addOne("LeadsXProducts.csv")
    resourceIns.setFileNames(filenames)
    fieldMappings = new ArrayBuffer[FieldMapping]
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("Products"))
    fieldMapping.setFindBy(Option("id"))
    fieldMapping.setIndex(Option(0))
    fieldMappings.addOne(fieldMapping)
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("Leads")) // Specify the API name of the lookup field in the Linking Module
    fieldMapping.setParentColumnIndex(Option(5)) // Specify the index of the identifier column in the parent CSV file
    fieldMapping.setIndex(Option(1)) // Specify the index of the identifier column in the child CSV file
    fieldMappings.addOne(fieldMapping)
    resourceIns.setFieldMappings(fieldMappings)
    resource.addOne(resourceIns)
    requestWrapper.setResource(resource)

2. Multi-User Lookup fields

In the case of multi-user lookup fields, the parent module is still the module where the multi-user field is added. The child module is the linking module created to facilitate this relationship.

For instance, let's consider a scenario where a multi-user field labeled Referred By is added in the Leads module, linking to the Users module.

Parent module : Leads

Child module : The linking module, LeadsXUsers.

To get more information about the child module, please utilize the Get Modules API. You can get the details of the fields within the child module using the Fields API.

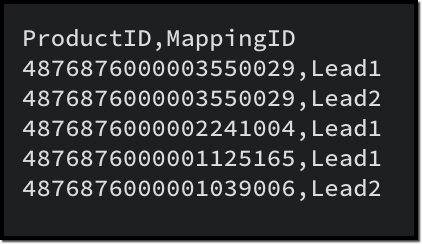

Here is a sample CSV for adding multi-user field records along with the parent records:

LeadsXUsers.csv

Please ensure that you create a zip file containing the corresponding CSV files, upload it to the platform and then initiate the bulk write job using the file ID. The values for index and parent_column_index will vary based on your specific CSV files.
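Since these positions depend on your files, one way to avoid hard-coding them is to read them from the CSV header row. A minimal sketch, assuming simple headers without quoted commas; the column name MappingID and the parent header used in the usage note are only examples consistent with the samples in this post.

```scala
object ColumnIndex {
  /** Zero-based position of each column name in a CSV header line.
    * If a name is repeated, the last occurrence wins.
    */
  def fromHeader(headerLine: String): Map[String, Int] =
    headerLine.split(",", -1).map(_.trim).zipWithIndex.toMap

  /** Looks up one column, returning None when the header does not contain it. */
  def indexOf(headerLine: String, column: String): Option[Int] =
    fromHeader(headerLine).get(column)
}
```

For a parent header such as `Company,First_Name,Last_Name,Email,Phone,MappingID`, `ColumnIndex.indexOf(header, "MappingID")` gives the value to use for parent_column_index, and the same lookup on the child header gives the value for index.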

To create a bulk write job using the Create Bulk Write Job API, add the following snippet to your resource array.

    {
      "type": "data",
      "module": {
        "api_name": "Leads_X_Users" // child module
      },
      "file_id": "4876876000006887001",
      "file_names": [
        "LeadsXUsers.csv" //child records CSV file name
      ],
      "field_mappings": [
        {
          "api_name": "Referred_User",
          "find_by": "id",
          "index": 0
        },
        {
          "api_name": "userlookup221_11", //API name of the Leads lookup field in LeadsXUsers module
          "parent_column_index": 5, // the index of the identifier column in the parent CSV file
          "index": 1 // the index of the identifier column in the child CSV file
        }
      ]
    }

To do the same using Scala SDK, add the following code snippet to your code:

    resourceIns = new Resource
    resourceIns.setType(new Choice[String]("data"))
    module = new MinifiedModule()
    module.setAPIName(Option("Leads_X_Users"))
    resourceIns.setModule(Option(module))
    resourceIns.setFileId(Option("4876876000006904001"))
    resourceIns.setIgnoreEmpty(Option(true))
    filenames = new ArrayBuffer[String]
    filenames.addOne("LeadsXUsers.csv")
    resourceIns.setFileNames(filenames)
    fieldMappings = new ArrayBuffer[FieldMapping]
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("Referred_User"))
    fieldMapping.setFindBy(Option("id"))
    fieldMapping.setIndex(Option(0))
    fieldMappings.addOne(fieldMapping)
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("userlookup221_11"))
    fieldMapping.setParentColumnIndex(Option(5))
    fieldMapping.setIndex(Option(1))
    fieldMappings.addOne(fieldMapping)
    resourceIns.setFieldMappings(fieldMappings)
    resource.addOne(resourceIns)
    requestWrapper.setResource(resource)

3. Subform data

To import subform data along with parent records in a single operation, you must include both the parent and subform CSV files within a zip file and upload it. In this context, the parent module refers to the module where the subform is added, and the child module is the subform module.

For instance, consider a subform named Alternate Address in the Leads module, with fields such as City and State.

Parent module : Leads

Child module : Alternate_Address (API name of the subform module).

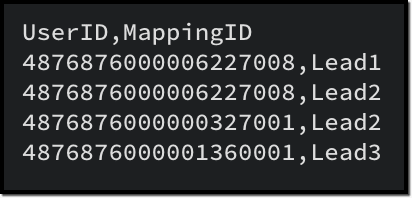

In the subform CSV file (Alternate_Address.csv), in addition to the data columns, include a column to denote the linkage to the parent record.
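If your source data is nested (each lead carrying its own list of addresses), the two CSV files can be generated together so that the linking column always matches. The sketch below is an illustration only: the case classes, the `L<n>` identifier scheme, and the MappingID column name are our own assumptions, and values containing commas are not escaped.

```scala
object SubformCsv {
  final case class Address(state: String, city: String)
  final case class Lead(company: String, lastName: String, addresses: List[Address])

  /** Builds (parent CSV, child CSV). Each lead gets an identifier "L<n>"
    * that is repeated on every one of its subform rows.
    */
  def build(leads: List[Lead]): (String, String) = {
    val keyed = leads.zipWithIndex.map { case (lead, i) => (s"L${i + 1}", lead) }
    val parentRows = keyed.map { case (id, l) => List(l.company, l.lastName, id).mkString(",") }
    val childRows = for {
      (id, l) <- keyed
      a <- l.addresses
    } yield List(a.state, a.city, id).mkString(",")
    val parentCsv = ("Company,Last_Name,MappingID" :: parentRows).mkString("\n")
    val childCsv = ("State,City,MappingID" :: childRows).mkString("\n")
    (parentCsv, childCsv)
  }
}
```

With this particular layout the identifier sits at position 2 in both files, so both index and parent_column_index would be 2; your own files will likely differ.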

Once the zip file containing both the parent and subform CSV files is prepared, upload it to start the import process. When you create the bulk write job, make sure the input specifies the values of index and parent_column_index that match your CSV files.

Here is a sample CSV for the subform data, corresponding to the parent CSV provided earlier.

Alternate_Address.csv

To create a bulk write job that imports the subform data using the Create Bulk Write Job API, add the following snippet to your resource array.

    {
      "type": "data",
      "module": {
        "api_name": "Alternate_Address" //Subform module API name
      },
      "file_id": "4876876000006915001",
      "file_names": [
        "Alternate_Address.csv" //child (subform) records CSV
      ],
      "field_mappings": [
        { "api_name": "State", "index": 0 },
        { "api_name": "City", "index": 1 },
        {
          "api_name": "Parent_Id", //Leads lookup field in the subform module
          "parent_column_index": 5,
          "index": 2
        }
      ]
    }

To do the same using Scala SDK, add the following code snippet to your code:

    resourceIns = new Resource
    resourceIns.setType(new Choice[String]("data"))
    module = new MinifiedModule()
    module.setAPIName(Option("Alternate_Address"))
    resourceIns.setModule(Option(module))
    resourceIns.setFileId(Option("4876876000006920001"))
    resourceIns.setIgnoreEmpty(Option(true))
    filenames = new ArrayBuffer[String]
    filenames.addOne("Alternate_Address.csv")
    resourceIns.setFileNames(filenames)
    fieldMappings = new ArrayBuffer[FieldMapping]
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("State"))
    fieldMapping.setIndex(Option(0))
    fieldMappings.addOne(fieldMapping)
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("City"))
    fieldMapping.setIndex(Option(1))
    fieldMappings.addOne(fieldMapping)
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("Parent_Id"))
    fieldMapping.setParentColumnIndex(Option(5))
    fieldMapping.setIndex(Option(2))
    fieldMappings.addOne(fieldMapping)
    resourceIns.setFieldMappings(fieldMappings)
    resource.addOne(resourceIns)
    requestWrapper.setResource(resource)

4. Line Items

To import line items along with the parent records, an approach similar to handling subform data is used. The parent module is the module housing the parent records, while the child module corresponds to the line item field.

For instance, in the Quotes module, to import product details within the record, the child module should be Quoted_Items.

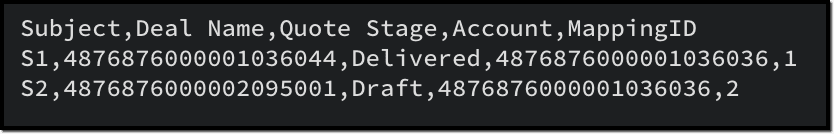

Here is a sample CSV for importing the parent records to the Quotes module:

Quotes.csv

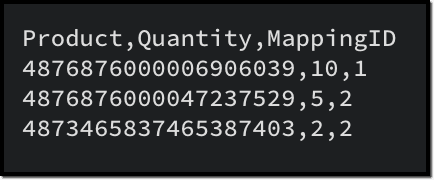

Given below is a sample CSV to add the product details in Quoted Items:

Quoted_Items.csv

Now to create a bulk write job for these records, here is a sample input JSON:

    {
      "operation": "insert",
      "ignore_empty": true,
      "callback": {
        "url": "http://www.zoho.com",
        "method": "post"
      },
      "resource": [
        {
          "type": "data",
          "module": {
            "api_name": "Quotes"
          },
          "file_id": "4876876000006949001",
          "file_names": [
            "Quotes.csv"
          ],
          "field_mappings": [
            { "api_name": "Subject", "index": 0 },
            { "api_name": "Deal_Name", "find_by": "id", "index": 1 },
            { "api_name": "Quote_Stage", "index": 2 },
            { "api_name": "Account_Name", "find_by": "id", "index": 3 }
          ]
        },
        {
          "type": "data",
          "module": {
            "api_name": "Quoted_Items"
          },
          "file_id": "4876876000006949001",
          "file_names": [
            "Quoted_Items.csv"
          ],
          "field_mappings": [
            { "api_name": "Product_Name", "find_by": "id", "index": 0 },
            { "api_name": "Quantity", "index": 1 },
            { "api_name": "Parent_Id", "parent_column_index": 4, "index": 2 }
          ]
        }
      ]
    }

To do the same using Scala SDK, add this code snippet to your file:

    val bulkWriteOperations = new BulkWriteOperations
    val requestWrapper = new RequestWrapper
    val callback = new CallBack
    callback.setUrl(Option("https://www.example.com/callback"))
    callback.setMethod(new Choice[String]("post"))
    requestWrapper.setCallback(Option(callback))
    requestWrapper.setCharacterEncoding(Option("UTF-8"))
    requestWrapper.setOperation(new Choice[String]("insert"))
    requestWrapper.setIgnoreEmpty(Option(true))
    val resource = new ArrayBuffer[Resource]

    var resourceIns = new Resource
    resourceIns.setType(new Choice[String]("data"))
    var module = new MinifiedModule()
    module.setAPIName(Option("Quotes"))
    resourceIns.setModule(Option(module))
    resourceIns.setFileId(Option("4876876000006953001"))
    resourceIns.setIgnoreEmpty(Option(true))
    var filenames = new ArrayBuffer[String]
    filenames.addOne("Quotes.csv")
    resourceIns.setFileNames(filenames)
    var fieldMappings: ArrayBuffer[FieldMapping] = new ArrayBuffer[FieldMapping]
    var fieldMapping: FieldMapping = null
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("Subject"))
    fieldMapping.setIndex(Option(0))
    fieldMappings.addOne(fieldMapping)
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("Deal_Name"))
    fieldMapping.setFindBy(Option("id"))
    fieldMapping.setIndex(Option(1))
    fieldMappings.addOne(fieldMapping)
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("Quote_Stage"))
    fieldMapping.setIndex(Option(2))
    fieldMappings.addOne(fieldMapping)
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("Account_Name"))
    fieldMapping.setIndex(Option(3))
    fieldMapping.setFindBy(Option("id"))
    fieldMappings.addOne(fieldMapping)
    resourceIns.setFieldMappings(fieldMappings)
    resource.addOne(resourceIns)
    requestWrapper.setResource(resource)

    resourceIns = new Resource
    resourceIns.setType(new Choice[String]("data"))
    module = new MinifiedModule()
    module.setAPIName(Option("Quoted_Items"))
    resourceIns.setModule(Option(module))
    resourceIns.setFileId(Option("4876876000006953001"))
    resourceIns.setIgnoreEmpty(Option(true))
    filenames = new ArrayBuffer[String]
    filenames.addOne("Quoted_Items.csv")
    resourceIns.setFileNames(filenames)
    fieldMappings = new ArrayBuffer[FieldMapping]
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("Product_Name"))
    fieldMapping.setFindBy(Option("id"))
    fieldMapping.setIndex(Option(0))
    fieldMappings.addOne(fieldMapping)
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("Quantity"))
    fieldMapping.setIndex(Option(1))
    fieldMappings.addOne(fieldMapping)
    fieldMapping = new FieldMapping
    fieldMapping.setAPIName(Option("Parent_Id"))
    fieldMapping.setParentColumnIndex(Option(4))
    fieldMapping.setIndex(Option(2))
    fieldMappings.addOne(fieldMapping)
    resourceIns.setFieldMappings(fieldMappings)
    resource.addOne(resourceIns)
    requestWrapper.setResource(resource)

Notes :

- When importing a single CSV file (parent or child module records separately), field_mappings is an optional key in the resource array. If you skip this key, the field mappings must be defined using the column names in the CSV file. In such cases, the column names should correspond to the field API names. Additionally, all columns should be mapped with the correct API names, and there should not be any extra unmapped columns.

- When importing parent and child records in a single API call, field_mappings is a mandatory key.

- The identifier column in the parent and child CSV can have different column names, as the mapping is done based on the input JSON.
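For the single-file case where field_mappings is skipped, the first note implies that every column name must be a valid field API name. A quick pre-upload check could look like the sketch below; it assumes you already have the set of field API names for the module (for example, collected from the Fields API), and the helper name is our own.

```scala
object HeaderCheck {
  /** Columns in the CSV header that are not in the known set of field API names. */
  def unknownColumns(headerLine: String, fieldApiNames: Set[String]): List[String] =
    headerLine.split(",", -1).map(_.trim).filterNot(fieldApiNames.contains).toList
}
```

An empty result means the header row can serve as the mapping; anything returned is a column that needs renaming or removal.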

Points to remember

- An uploaded file can be used for a single bulk write job only. If you want to retry the operation with the same data, upload the file again to generate a new file ID.

- When adding parent and child records in a single operation, ensure that the parent module comes first, followed by the child module details in the resource array of the input body.

- The parent and all child CSV files should be zipped into a single file and uploaded. You cannot use more than one zip file in a single bulk write job.

- Define appropriate mappings for both parent and child records using the parent_column_index and index keys to establish the relationship.

- Use the resource > file_names key to map each CSV file to the appropriate module.

- For each parent in the parent records file:

  - By default, the limit for Subforms and Line Items is set to 200. While you can configure this limit for subforms in the UI, customization options are not available for Line Items.

  - MultiSelect Lookup fields have a maximum limit of 100. If you have more than 100 associations for a MultiSelect Lookup field, you may schedule additional bulk write jobs for the child records alone, importing 100 records at a time.

  - The maximum limit for Multi-User Lookup fields is restricted to 10.
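The per-parent limits above can be checked against the child CSV before uploading. The sketch below counts child rows per parent identifier and splits an oversized set into batches; the constants simply restate the default limits from this post, and the helper names are our own.

```scala
object ChildLimits {
  // Default per-parent limits as stated in this post.
  val SubformOrLineItems = 200
  val MultiSelectLookup = 100
  val MultiUserLookup = 10

  /** Parent identifiers whose number of child rows exceeds `limit`, with their counts.
    * `childParentIds` holds the identifier-column value of every child row.
    */
  def overLimit(childParentIds: Seq[String], limit: Int): Map[String, Int] =
    childParentIds.groupBy(identity).map { case (id, rows) => id -> rows.size }.filter(_._2 > limit)

  /** Splits one parent's child rows into batches of at most `limit` rows each. */
  def batches[A](childRows: Seq[A], limit: Int): List[Seq[A]] =
    childRows.grouped(limit).toList
}
```

For a MultiSelect Lookup field, each batch after the first would go into its own follow-up bulk write job for the child records alone.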

We hope that you found this post useful, and you have gained some insights into using the Bulk Write API effectively. If you have any queries, let us know in the comments below, or feel free to send an email to support@zohocrm.com. We would love to hear from you!

Recommended Reads :

- Check out our Kaizen Collection here

- Previous Kaizen : Zoho CRM Scala SDK (V6) - Configuration and Initialization

- Create a Bulk Write Job

- Kaizen #103 - Bulk Write API using PHP SDK (v5) - Part I

- Kaizen #104 - Bulk Write API using PHP SDK (v5) - Part II

Topic Participants

Anu Abraham

Andrea Dalseno

Calum Beck

Jeganprabhu S
