Kaizen #131 - Bulk Write for parent-child records using Scala SDK

Hello and welcome back to this week's Kaizen!

Last week, we discussed how to configure and initialize the Zoho CRM Scala SDK. This week, we will be exploring the Bulk Write API and its capabilities. Specifically, we will focus on executing bulk write operations for parent-child records in a single operation, and how to do this using Scala SDK.

Quick Recap of Bulk Write API

Bulk Write API facilitates efficient insertion, updation, or upsertion of large datasets into your CRM account. It operates asynchronously, scheduling jobs to handle data operations. Upon completion, notifications are sent to the specified callback URL or the job status can be checked periodically.

When to use Bulk Write API?

- When scheduling a job to import a massive volume of data.

- When needing to process more than 100 records in a single API call.

- When conducting background processes like migration or initial data sync between Zoho CRM and external services.

Steps to Use Bulk Write API:

- Prepare CSV File: Create a CSV file with field API names as the first row and data in subsequent rows.

- Upload Zip File: Compress the CSV file into a zip format and upload it via a POST request.

- Create Bulk Write Job: Use the uploaded file ID, callback URL, and field API names to create a bulk write job for insertion, update, or upsert operations.

- Check Job Status: Monitor job status through polling or callback methods. Status could be ADDED, INPROGRESS, or COMPLETED.

- Download Result: Retrieve the result of the bulk write job, typically a CSV file with job details, using the provided download URL.

In our previous Kaizen posts - Bulk Write API Part I and Part II, we have extensively covered the Bulk Write API, complete with examples and sample codes for the PHP SDK. We highly recommend referring to those posts before reading further to gain a better understanding of the Bulk Write API.

With the release of our V6 APIs, we have introduced a significant enhancement to our Bulk Write API functionality. Previously, performing bulk write operations required separate API calls for parent and each child module. But with this enhancement, you can now import them all in a single, operation or API call.

Field Mappings for parent-child records in a single API call

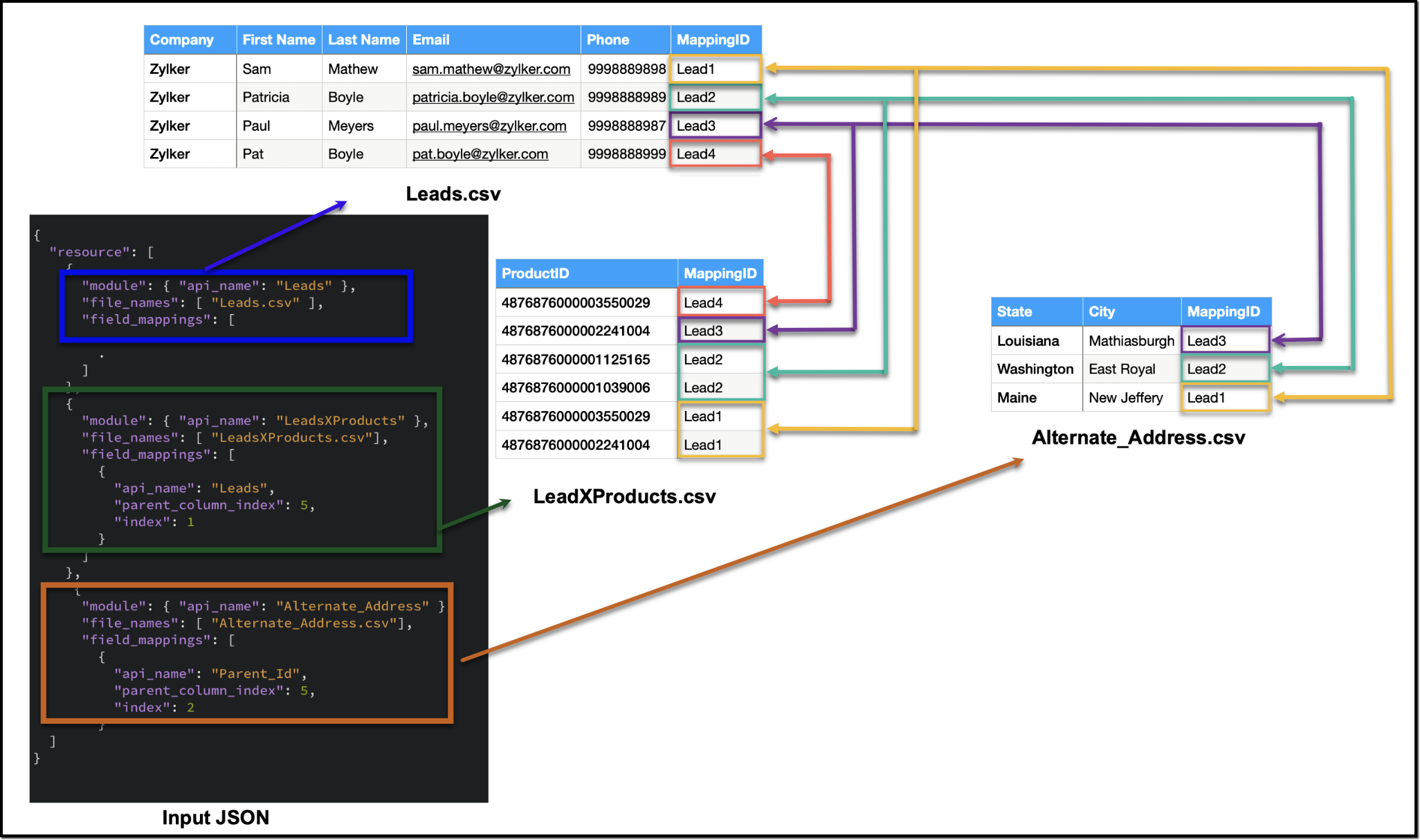

When configuring field mappings for bulk write operations involving parent-child records in a single API call, there are two key aspects to consider: creating the CSV file containing the data and constructing the input JSON for the bulk write job.

Creating the data CSV file:

To set up the data for a bulk write operation involving parent-child records, you need to prepare separate CSV files - one for the parent module records, and one each for each child module records. In these CSV files, appropriate field mappings for both parent and child records need to be defined.

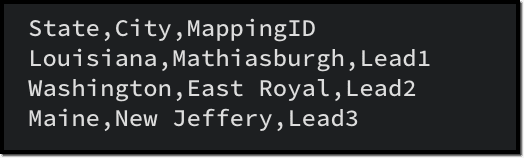

The parent CSV file will contain the parent records, while the child CSV file will contain the child records. To make sure that each child record is linked to its respective parent record, we will add an extra column (MappingID in the image below) to both the parent and child CSV files. This column will have a unique identifier value for each parent record. For each record in the child CSV file, the value in the identifier column should match the value of the identifier of the parent record in the parent CSV file. This ensures an accurate relationship between the parent and child records during the bulk write operation.

Please be aware that the mapping of values is solely dependent on the mappings defined in the input JSON. In this case, the column names in the CSV file serve only as a reference for you. Please refer to the notes section towards the end of this document for more details.

Creating the CSV file remains consistent across all types of child records, and we have already discussed how each child record is linked to its respective parent record in the CSV file. To facilitate the same linkage in the input JSON, we have introduced a new key called parent_column_index. This key assists us in specifying which column in the child module's CSV file contains the identifier or index linking it to the parent record. In the upcoming sections, we will explore preparing the input JSON for various types of child records.

Additionally, since we have multiple CSV files in the zip file, we have introduced another new key named file_names in resources array. file_names helps in correctly mapping each CSV file to its corresponding module.

Ensure that when adding parent and child records in a single operation, the parent module details should be listed first, followed by the child module details in the resource array of the input body.

1. Multiselect Lookup Fields

In scenarios involving multiselect lookup fields, the Bulk Write API now allows for the import of both parent and child records in a single operation.

In the context of multiselect lookup fields, the parent module refers to the primary module where the multiselect lookup field is added. For instance, in our example, consider a multiselect lookup field in the Leads module linking to the Products module.

Parent Module : Leads

Child module : The linking module that establishes the relationship between the parent module and the related records (LeadsXProducts)

Here are the sample files for the "LeadsXProducts" case:

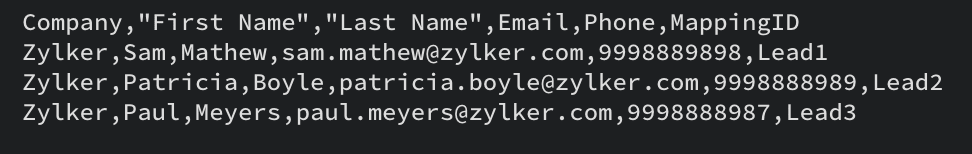

Leads.csv (Parent)

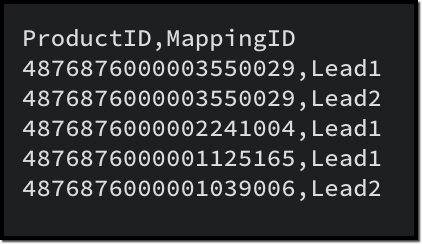

LeadsXProducts.csv (Child)

Given below is a sample input JSON for this bulk write job. Please note that the index of the child linking column should be mapped under the key index, and the index of the parent column index should be mapped under parent_column_index.

To map the child records to their corresponding parent records (linking module), you must use the field API name of the lookup field that links to the parent module. For example, in this case, the API name of the lookup field linking to the Leads module from the LeadsXProducts is Leads.

{ "operation": "insert", "ignore_empty": true, "callback": { "url": "http://www.zoho.com", "method": "post" }, "resource": [ { "type": "data", "module": { "api_name": "Leads" //parent module API name }, "file_id": "4876876000006855001", "file_names": [ "Leads.csv" //parent records CSV file ], "field_mappings": [ // field mappings for the parent record fields { "api_name": "Company", //field API name "index": 0 //index in the CSV file }, { "api_name": "First_Name", "index": 1 }, { "api_name": "Last_Name", "index": 2 }, { "api_name": "Email", "index": 3 }, { "api_name": "Phone", "index": 4 } ] }, { "type": "data", "module": { "api_name": "LeadsXProducts" //child module API name }, "file_id": "4876876000006855001", "file_names": [ "LeadsXProducts.csv" //child records CSV file ], "field_mappings": [ { "api_name": "Products", "find_by": "id", "index": 0 }, { "api_name": "Leads", //field API name of the lookup field in the Linking Module "parent_column_index": 5, // the index of the identifier column in the parent CSV file "index": 1 //index of the identifier column in the child CSV file } ] } ] } |

The following is a sample code snippet for the Scala SDK, to achieve the same functionality. Find the complete code here.

var module = new MinifiedModule() // Create a new instance of MinifiedModule module.setAPIName(Option("Leads")) // Set the API name for the module to "Leads" resourceIns.setModule(Option(module)) resourceIns.setFileId(Option("4876876000006899001")) // Set the file ID for the resource instance resourceIns.setIgnoreEmpty(Option(true)) var filenames = new ArrayBuffer[String] // Create a new ArrayBuffer to store file names filenames.addOne("Leads.csv") resourceIns.setFileNames(filenames) // Set the file names for the resource instance // Create a new ArrayBuffer to store field mappings var fieldMappings: ArrayBuffer[FieldMapping] = new ArrayBuffer[FieldMapping] // Create a new FieldMapping instance for each field var fieldMapping: FieldMapping = null fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("Company")) fieldMapping.setIndex(Option(0)) fieldMappings.addOne(fieldMapping) . . // Set the field mappings for the resource instance resourceIns.setFieldMappings(fieldMappings) resource.addOne(resourceIns) requestWrapper.setResource(resource) resourceIns = new Resource resourceIns.setType(new Choice[String]("data")) module = new MinifiedModule() module.setAPIName(Option("LeadsXProducts")) resourceIns.setModule(Option(module)) resourceIns.setFileId(Option("4876876000006899001")) resourceIns.setIgnoreEmpty(Option(true)) filenames = new ArrayBuffer[String] filenames.addOne("LeadsXProducts.csv") resourceIns.setFileNames(filenames) fieldMappings = new ArrayBuffer[FieldMapping] fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("Products")) fieldMapping.setFindBy(Option("id")) fieldMapping.setIndex(Option(0)) fieldMappings.addOne(fieldMapping) fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("Leads")) //Specify the API name of the lookup filed in the Linking Module fieldMapping.setParentColumnIndex(Option(5)) //Specify the index of the identifier column in the parent CSV file fieldMapping.setIndex(Option(1)) //Specify the index of the identifier column in the child CSV file fieldMappings.addOne(fieldMapping) resourceIns.setFieldMappings(fieldMappings) resource.addOne(resourceIns) requestWrapper.setResource(resource) |

2. Multi-User Lookup fields

In case of multi-user lookup fields, the parent module remains the module where the multi-user field is added. The child module is the lookup module created to facilitate this relationship.

For instance, let's consider a scenario where a multi-user field labeled Referred By is added in the Leads module, linking to the Users module.

Parent module : Leads

Child module : The linking module, LeadsXUsers.

To get more information about the child module, please utilize the Get Modules API. You can get the details of the fields within the child module using the Fields API.

Here is a sample CSV for adding a multi-user field records along with the parent records:

LeadsXUsers.csv

Please ensure that you create a zip file containing the corresponding CSV files, upload it to the platform and then initiate the bulk write job using the file ID. The values for index and parent_column_index will vary based on your specific CSV files.

To create a bulk write job using Create Bulk Write job API, add the following code snippet to your resource array.

{ "type": "data", "module": { "api_name": "Leads_X_Users" // child module }, "file_id": "4876876000006887001", "file_names": [ "LeadsXUsers.csv" //child records CSV file name ], "field_mappings": [ { "api_name": "Referred_User", "find_by": "id", "index": 0 }, { "api_name": "userlookup221_11", //API name of the Leads lookup field in LeadsXUsers module "parent_column_index": 5, // the index of the identifier column in the parent CSV file "index": 1 // the index of the identifier column in the child CSV file } ] } |

To do the same using Scala SDK, add the following code snippet to your code:

resourceIns = new Resource resourceIns.setType(new Choice[String]("data")) module = new MinifiedModule() module.setAPIName(Option("Leads_X_Users")) resourceIns.setModule(Option(module)) resourceIns.setFileId(Option("4876876000006904001")) resourceIns.setIgnoreEmpty(Option(true)) filenames = new ArrayBuffer[String] filenames.addOne("LeadsXUsers.csv") resourceIns.setFileNames(filenames) fieldMappings = new ArrayBuffer[FieldMapping] fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("Referred_User")) fieldMapping.setFindBy(Option("id")) fieldMapping.setIndex(Option(0)) fieldMappings.addOne(fieldMapping) fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("userlookup221_11")) fieldMapping.setParentColumnIndex(Option(5)) fieldMapping.setIndex(Option(1)) fieldMappings.addOne(fieldMapping) resourceIns.setFieldMappings(fieldMappings) resource.addOne(resourceIns) requestWrapper.setResource(resource) |

3. Subform data

To import subform data along with parent records in a single operation, you must include both the parent and subform CSV files within a zip file and upload it. In this context, the parent module refers to the module where the subform is added, and the child module is the subform module.

For instance, consider a subform named Alternate Address in the Leads module, with fields such as City and State.

Parent module : Leads

Child module : Alternate_Address (api name of the Subform module).

In the subform CSV file (Alternate_Address.csv), in addition to the data columns, include a column to denote the linkage to the parent record.

Once the zip file containing both the parent and subform CSV files is prepared, proceed to upload it to initiate the import process. When you create the bulk write job, ensure to specify the appropriate values for index and parent_column_index based on your specific CSV files in the input.

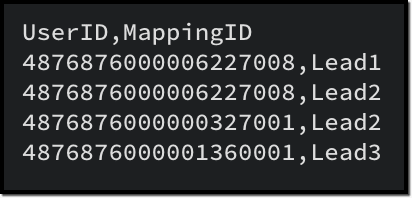

Here is a sample CSV for the subform data, corresponding to the parent CSV provided earlier.

Alternate_Address.csv

To create a bulk write job using Create Bulk Write job API to import the subform data, add the following code snippet to your resource array.

{ "type": "data", "module": { "api_name": "Alternate_Address" //Subform module API name }, "file_id": "4876876000006915001", "file_names": [ "Alternate_Address.csv" //child (subform) records CSV ], "field_mappings": [ { "api_name": "State", "index": 0 }, { "api_name": "City", "index": 1 }, { "api_name": "Parent_Id", //Leads lookup field in the subform module "parent_column_index": 5, "index": 2 } ] } |

To do the same using Scala SDK, add the following code snippet to your code:

resourceIns = new Resource resourceIns.setType(new Choice[String]("data")) module = new MinifiedModule() module.setAPIName(Option("Alternate_Address")) resourceIns.setModule(Option(module)) resourceIns.setFileId(Option("4876876000006920001")) resourceIns.setIgnoreEmpty(Option(true)) filenames = new ArrayBuffer[String] filenames.addOne("Alternate_Address.csv") resourceIns.setFileNames(filenames) fieldMappings = new ArrayBuffer[FieldMapping] fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("State")) fieldMapping.setIndex(Option(0)) fieldMappings.addOne(fieldMapping) fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("City")) fieldMapping.setIndex(Option(1)) fieldMappings.addOne(fieldMapping) fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("Parent_Id")) fieldMapping.setParentColumnIndex(Option(5)) fieldMapping.setIndex(Option(2)) fieldMappings.addOne(fieldMapping) resourceIns.setFieldMappings(fieldMappings) resource.addOne(resourceIns) requestWrapper.setResource(resource) |

4. Line Items

To import line items along with the parent records, an approach similar to handling subform data is used. The parent module is the module housing the parent records, while the child module corresponds to the line item field.

For instance, in the Quotes module, to import product details within the record, the child module should be Quoted_Items.

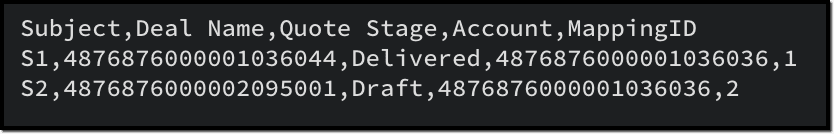

Here is a sample CSV for importing the parent records to the Quotes module:

Quotes.csv

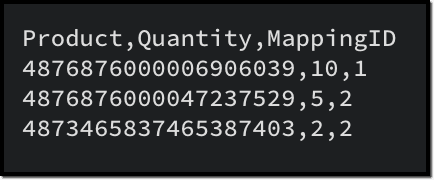

Given below is a sample CSV to add the product details in Quoted Items:

Quoted_Items.csv

Now to create a bulk write job for these records, here is a sample input JSON:

{ "operation": "insert", "ignore_empty": true, "callback": { "url": "http://www.zoho.com", "method": "post" }, "resource": [ { "type": "data", "module": { "api_name": "Quotes" }, "file_id": "4876876000006949001", "file_names": [ "Quotes.csv" ], "field_mappings": [ { "api_name": "Subject", "index": 0 }, { "api_name": "Deal_Name", "find_by" : "id", "index": 1 }, { "api_name": "Quote_Stage", "index": 2 }, { "api_name": "Account_Name", "find_by" : "id", "index": 3 } ] }, { "type": "data", "module": { "api_name": "Quoted_Items" }, "file_id": "4876876000006949001", "file_names": [ "Quoted_Items.csv" ], "field_mappings": [ { "api_name": "Product_Name", "find_by" : "id", "index": 0 }, { "api_name": "Quantity", "index": 1 }, { "api_name": "Parent_Id", "parent_column_index": 4, "index": 2 } ] } ] } |

To do the same using Scala SDK, add this code snippet to your file:

val bulkWriteOperations = new BulkWriteOperations val requestWrapper = new RequestWrapper val callback = new CallBack callback.setUrl(Option("https://www.example.com/callback")) callback.setMethod(new Choice[String]("post")) requestWrapper.setCallback(Option(callback)) requestWrapper.setCharacterEncoding(Option("UTF-8")) requestWrapper.setOperation(new Choice[String]("insert")) requestWrapper.setIgnoreEmpty(Option(true)) val resource = new ArrayBuffer[Resource] var resourceIns = new Resource resourceIns.setType(new Choice[String]("data")) var module = new MinifiedModule() module.setAPIName(Option("Quotes")) resourceIns.setModule(Option(module)) resourceIns.setFileId(Option("4876876000006953001")) resourceIns.setIgnoreEmpty(Option(true)) var filenames = new ArrayBuffer[String] filenames.addOne("Quotes.csv") resourceIns.setFileNames(filenames) var fieldMappings: ArrayBuffer[FieldMapping] = new ArrayBuffer[FieldMapping] var fieldMapping: FieldMapping = null fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("Subject")) fieldMapping.setIndex(Option(0)) fieldMappings.addOne(fieldMapping) fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("Deal_Name")) fieldMapping.setFindBy(Option("id")) fieldMapping.setIndex(Option(1)) fieldMappings.addOne(fieldMapping) fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("Quote_Stage")) fieldMapping.setIndex(Option(2)) fieldMappings.addOne(fieldMapping) fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("Account_Name")) fieldMapping.setIndex(Option(3)) fieldMapping.setFindBy(Option("id")) fieldMappings.addOne(fieldMapping) resourceIns.setFieldMappings(fieldMappings) resource.addOne(resourceIns) requestWrapper.setResource(resource) resourceIns = new Resource resourceIns.setType(new Choice[String]("data")) module = new MinifiedModule() module.setAPIName(Option("Quoted_Items")) resourceIns.setModule(Option(module)) resourceIns.setFileId(Option("4876876000006953001")) resourceIns.setIgnoreEmpty(Option(true)) filenames = new ArrayBuffer[String] filenames.addOne("Quoted_Items.csv") resourceIns.setFileNames(filenames) fieldMappings = new ArrayBuffer[FieldMapping] fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("Product_Name")) fieldMapping.setFindBy(Option("id")) fieldMapping.setIndex(Option(0)) fieldMappings.addOne(fieldMapping) fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("Quantity")) fieldMapping.setIndex(Option(1)) fieldMappings.addOne(fieldMapping) fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("Parent_Id")) fieldMapping.setParentColumnIndex(Option(4)) fieldMapping.setIndex(Option(2)) fieldMappings.addOne(fieldMapping) resourceIns.setFieldMappings(fieldMappings) resource.addOne(resourceIns) requestWrapper.setResource(resource) |

Notes :

- When importing a single CSV file (parent or child module records separately), field_mappings is an optional key in the resource array. If you skip this key, the field mappings must be defined using the column names in the CSV file. In such cases, the column names should correspond to the field API names. Additionally, all columns should be mapped with the correct API names, and there should not be any extra unmapped columns.

- When importing parent and child records in a single API call, field_mappings is a mandatory key.

- The identifier column in the parent and child CSV can have different column names, as the mapping is done based on the input JSON.

Points to remember

- An uploaded file can be used for a single bulk write job only. If you want to retry the operation with the same data, upload the file again to generate a new file ID.

- When adding parent and child records in a single operation, ensure that the parent module comes first, followed by the child module details in the resource array of the input body.

- The parent and all child CSV files should be zipped into a single file and uploaded. You cannot use more than one zip file in a single bulk write job.

- Define appropriate mappings for both parent and child records using the parent_column_index and index key to establish the relationship.

- Utilize the resources > file_names key to map the correct CSV with the appropriate module

- For each parent in the parent records file:

- By default, the limit for Subforms and Line Items is set to 200. While you can configure this limit for subforms in the UI, customization options are not available for Line Items.

- MultiSelect Lookup fields have a maximum limit of 100. If you have more than 100 associations for a MultiSelect Lookup field, you may schedule additional bulk write jobs for the child records alone, importing 100 records at a time.

- The maximum limit for Multi-User Lookup fields is restricted to 10.

We hope that you found this post useful, and you have gained some insights into using the Bulk Write API effectively. If you have any queries, let us know in the comments below, or feel free to send an email to support@zohocrm.com. We would love to hear from you!

Recommended Reads :

- Check out our Kaizen Collection here

- Previous Kaizen : Zoho CRM Scala SDK (V6) - Configuration and Initialization

- Create a Bulk Write Job

- Kaizen #103 - Bulk Write API using PHP SDK (v5) - Part I

- Kaizen #104 - Bulk Write API using PHP SDK (v5) - Part II

Topic Participants

Anu Abraham

Andrea Dalseno

Calum Beck

Jeganprabhu S

Sticky Posts

Kaizen #226: Using ZRC in Client Script

Hello everyone! Welcome to another week of Kaizen. In today's post, lets see what is ZRC (Zoho Request Client) and how we can use ZRC methods in Client Script to get inputs from a Salesperson and update the Lead status with a single button click. In thisKaizen #222 - Client Script Support for Notes Related List

Hello everyone! Welcome to another week of Kaizen. The final Kaizen post of the year 2025 is here! With the new Client Script support for the Notes Related List, you can validate, enrich, and manage notes across modules. In this post, we’ll explore howKaizen #217 - Actions APIs : Tasks

Welcome to another week of Kaizen! In last week's post we discussed Email Notifications APIs which act as the link between your Workflow automations and you. We have discussed how Zylker Cloud Services uses Email Notifications API in their custom dashboard.Kaizen #216 - Actions APIs : Email Notifications

Welcome to another week of Kaizen! For the last three weeks, we have been discussing Zylker's workflows. We successfully updated a dormant workflow, built a new one from the ground up and more. But our work is not finished—these automated processes areKaizen #152 - Client Script Support for the new Canvas Record Forms

Hello everyone! Have you ever wanted to trigger actions on click of a canvas button, icon, or text mandatory forms in Create/Edit and Clone Pages? Have you ever wanted to control how elements behave on the new Canvas Record Forms? This can be achieved

Recent Topics

Optimum CRM setup for new B2B business

Can some advise the most common way to setup Zoho CRM to handle sales for a B2B company? Specifically in how to handle inbound/outbound emails. I have spent hours researching online and can't seem to find an accepted approach, or even a tutorial. I haveScan and Fill CRM Lookup Field

Not sure if there is a reason why this isn't possible or if I'm just missing it. But I would like to be able to use the scan and fill feature on the mobile app to prefill the CRM lookup field and fetch the rest of the data in the form.Customer Management: #2 Organize Customers to Enhance Efficiency

When Ankit started his digital services firm, things felt simple. A client would call, ask for a website or a one-time consultation, Ankit would send an invoice, get paid, and move on. "Just one client, one invoice. Easy.", he thought. Fast forward aZoho Mail and Zoho Flow integration to automatically create ToDo tasks from outbound emails

How do i setup Zoho Mail and Zoho Flow integration to automatically create ToDo tasks from outbound emailsAttachments between Zoho and Clickup, using Flow.

Olá suporte Flow, tudo bem ? Estamos usando o flow para integrar Zoho Desk com o clickup. Não localizamos a opção de integrar anexos entre do zoho Desk para o clickup. Gostaríamos de saber se migrando para o plano pago, teremos suporte para fazer a integraçãoAdding an Account on Zoho Mail Trigger in Zoho Flow

I'm trying to create a flow using the zoho mail trigger "Email Receive". My problem is that when I select this trigger, it only shows one account from the account dropdown. I'm planning to assign it on a different email. How can I add other email adLinnworks

Unless I am missing something, the Linnworks integration is very basic and limited. I have reached out to support but the first response was completely useless and trying to get a reply in a timely manner is very difficult. Surely I should be able toTest data won't load

I am using a Flow to receive orders from WooCommerce and add them to a Zoho Creator app. I recently received an order which failed, and when attempting to test the order I found that it just shows a loading animation and shows up in the history as "queued."AddHour resets the time to 00:00:00 before adding the hour.

Based on the documentation here: https://www.zoho.com/deluge/help/functions/datetime/addhour.html Here's my custom function: string ConvertDateFormat(string inputDate) { // Extract only the date-time part (before the timezone) dateTimePart = inputDate.subString(0,19);WhatsApp Link is not integrating

Hello, I am using zoho flow. when new row added in google sheet it sends email to respected person. In email body I have a text "Share via WhatsApp". behind this text I putted a link. But when the recipient receives email and wants to share my given infoZoho flow - Webhook

If I choose an app as a trigger in Zoho Flow, is it still possible to add a webhook later in the same flow?Zoho Flow + Bigin + Shopify

We are testing Zoho Flow for the first time and want to create a flow based in first purchases. When a client makes his first order, we're going to add the "primeiracompra" (first order) tag to his account in Shopify (it's not efficient, but that's theIs it The Flow? Or is it me?

I want to do some basic level stuff, take two fields from a webhook, create a zsheet from a template using one field with date appended, create a folder using both fields as the name, and put the zsheet into that folder. I was going to elaborate - butHaving problem with data transferring from Google sheet to ZMA

When connecting Google sheet with Zoho marketing automation it is having the email as a mandatory field. Can I change it as non-mandatory field or is there any other way to trasnfer data from google sheet to ZMA. I have leads which we get from whatsapp,Dropbox to Workdrive synchronisation

I want to get all the files and folders from Dropbox to Workdrive and each time a new file or folder is added in dropbox i want it to be available in Workdrive and wise versa. Sync Updates to Files Trigger: "File updated" (Dropbox). Action: "Upload file"Microsoft Planner Task to Service Desk Plus Request - error n4001

Hi there. I'm trying to create a flow that will create a new request in ServiceDesk Plus when a new task is created in Microsoft Planner. I have succesfully connected both Planner and ServiceDesk Plus, and have configured the 'create request' sectionTrailing Space in "Date and time scheduled "

I am trying to use the Zoho Projects - Create event action in a flow. It is failing with the output error as: "Action did not execute successfully due to an unknown error. Contact support for more details." The input is: { "Duration - Minutes": 30, "Project":Project name by deal name; project creation via flow

Hello, I want to create a project in zoho projects using flow by a trigger at the crm. My trigger is the update of a deal (stage). The project name should be the account name/ deal name. But I dont find the solution to it. Can you please give me the answerSlack / Zoho Flow; Repl

I am trying to add a comment in a zoho ticket when someone reply's to a message in a thread. The Message posted to public channel trigger doesn't seem to pick up thread messages. I also cannot use the thread_ts field as it doesn't seem to pull that in.Get Holiday ready with Zoho Mail's Templates

As the holiday season approaches, it’s time to step away from work and unwind. You may not be able to respond to every email or send individual messages to wish everyone holidays greetings—but It is still important to stay connected. How do you send thoughtfulCustomize folder permissions in a Team Folder in a Team Folder via zoho Flow

HI All, on the nth level folder of a team folder I would like to Customize folder permissions when it's created in the flow of Creating folders. That last level I only want to grant access to a specific group, goup ID 201XXXXXXXX. Can you help with aAssociating a Candidate to a Job Listing

Hello, I am trying to use Zoho Forms embedded on my website for candidates to apply for a job opening. I want the form then to tie directly with zoho recruit and have the candidate be automatically inputed into Recruit as well as associated with the specificAutomate reminder emails for events

Hi team, I am trying to automate send event reminders via zoho campaign to my attendees 1 day prior to my scheduled events. I used zoho flow, autoresponder in zoho campaign, as well as I used workflow and automation - but none of these methods are working.Update related module entry Zoho Flow not working with custom module ?

Hi everyone. I am facing an issue here on Zoho Flow. Basically what I am doing is checking when a module entry is being filled in with an Event ID. Event is a custom module that I created. If the field is being filled in I fetch the contact with its IDHow to disable time log on / time log off

Hi We use zoho people just to manage our HR Collaborators. We don't need that each persona check in and out the time tracker. How to disable from the screen that ?Dealing With One-Time Customers on Zoho Books

Hello there! I am trying to figure out a way to handle One-Time customers without having to create multiple accounts for every single one on Zoho Books. I understand that I can create a placeholder account called "Walk-In Customer", for example, but IZoho Flow - Add to Google Calendar from trigger in Zoho Creator App

Hello! New to Zoho Flow, but I believe I have everything setup the way it should be however getting an error saying "Google Calendar says "Bad Request". Any idea where I should start looking? Essentially some background: Zoho Creator app has a triggerEmail authentication

أريد التحقق من البريد الإلكترونيWhat’s New in Zoho Analytics – December 2025

December is a special time of the year to celebrate progress, reflect on what we have achieved, and prepare for what’s ahead! As we wrap up the year, this month’s updates focus on refining experiences, strengthening analytics workflows, and setting theMarketing Tip #12: Earn trust with payment badges and clear policies

Online shoppers want to know they can trust your store. Displaying trust signals such as SSL-secure payment badges, return and refund policies, and verified reviews shows visitors that your store is reliable. These visual cues can turn hesitation intoThe improved portal experience: Introducing the template view for inventory modules, enhanced configurations, and PDF export support

Availability: Open for all DCs. Editions: All Hello everyone, You can now achieve a seamless, brand-aligned portal experience with our enhanced configuration options and the new template view for inventory modules. Your clients will now be able to viewZoho Analytics Bulk Api Import json Data

HI, I’m trying to bulk-update rows in Zoho Analytics, and below are the request and response details. I’d like to understand the required parameters for constructing a bulk API request to import or update data in a table using Deluge. Any guidance onProject Management Bulletin: December, 2025

The holiday cheer is in the air and it’s time to reflect on the year that was. At Zoho PM Suite, we've been working behind the scenes on something huge and exciting all year and now we are almost ready—with just a bit of confetti—for our grand releaseInventory batch details

Hi there, I'm trying to get the batch details of an item, here's what I've done so far. I've sent cUrl request to the below endpoint and I get a successful response. Within in the response I find the "warehouses" property which correctly lists all theAuto check out after shift complete

i'm just stuck here right now, i wanna know how to do this thing, now tell me, how can i configure a custom function that runs after complete shift time if employee forget to check-out ?How to create a flow that creates tickets automaticaly everyday based on specific times

Hi guys Does anyone know how to create a flow that will create tickets automaticaly in ZOHO Desk when a certain time is reached. Im havin a hard time configuring a flow that will create tickets automaticaly everyday during specific hours of the day ForZOHO FLOW - ZOHO CREATOR - ZOHO WRITER : Get Related records

Bonjour, J'ai besoin que vous m'ajoutiez la solution "Get related Records" dans la liste de choix de zoho creator (sous Zoho flow). En effet, j'ai besoin de récupérer les champs d'un sous formulaire pour l'ajouter à l'impression de mon document. MerWill zoho thrive be integrated with Zoho Books?

titleConnecting email for each department in ZohoDesk

Hi! Could someone help me to go through connecting emails for each department?How do I trigger a Flow based on a campaign response?

Is there a way to trgiider a Zoho Flow based upon a lead opening an email sent via Zoho Campaigns? I see that the data is recorded in the 'Campaigns' section of Zoho CRM under 'Member Status' and I want to trgigger a flow based upon that record changing.Next Page