Kaizen #131 - Bulk Write for parent-child records using Scala SDK

Hello and welcome back to this week's Kaizen!

Last week, we discussed how to configure and initialize the Zoho CRM Scala SDK. This week, we will be exploring the Bulk Write API and its capabilities. Specifically, we will focus on executing bulk write operations for parent-child records in a single operation, and how to do this using Scala SDK.

Quick Recap of Bulk Write API

Bulk Write API facilitates efficient insertion, updation, or upsertion of large datasets into your CRM account. It operates asynchronously, scheduling jobs to handle data operations. Upon completion, notifications are sent to the specified callback URL or the job status can be checked periodically.

When to use Bulk Write API?

- When scheduling a job to import a massive volume of data.

- When needing to process more than 100 records in a single API call.

- When conducting background processes like migration or initial data sync between Zoho CRM and external services.

Steps to Use Bulk Write API:

- Prepare CSV File: Create a CSV file with field API names as the first row and data in subsequent rows.

- Upload Zip File: Compress the CSV file into a zip format and upload it via a POST request.

- Create Bulk Write Job: Use the uploaded file ID, callback URL, and field API names to create a bulk write job for insertion, update, or upsert operations.

- Check Job Status: Monitor job status through polling or callback methods. Status could be ADDED, INPROGRESS, or COMPLETED.

- Download Result: Retrieve the result of the bulk write job, typically a CSV file with job details, using the provided download URL.

In our previous Kaizen posts - Bulk Write API Part I and Part II, we have extensively covered the Bulk Write API, complete with examples and sample codes for the PHP SDK. We highly recommend referring to those posts before reading further to gain a better understanding of the Bulk Write API.

With the release of our V6 APIs, we have introduced a significant enhancement to our Bulk Write API functionality. Previously, performing bulk write operations required separate API calls for parent and each child module. But with this enhancement, you can now import them all in a single, operation or API call.

Field Mappings for parent-child records in a single API call

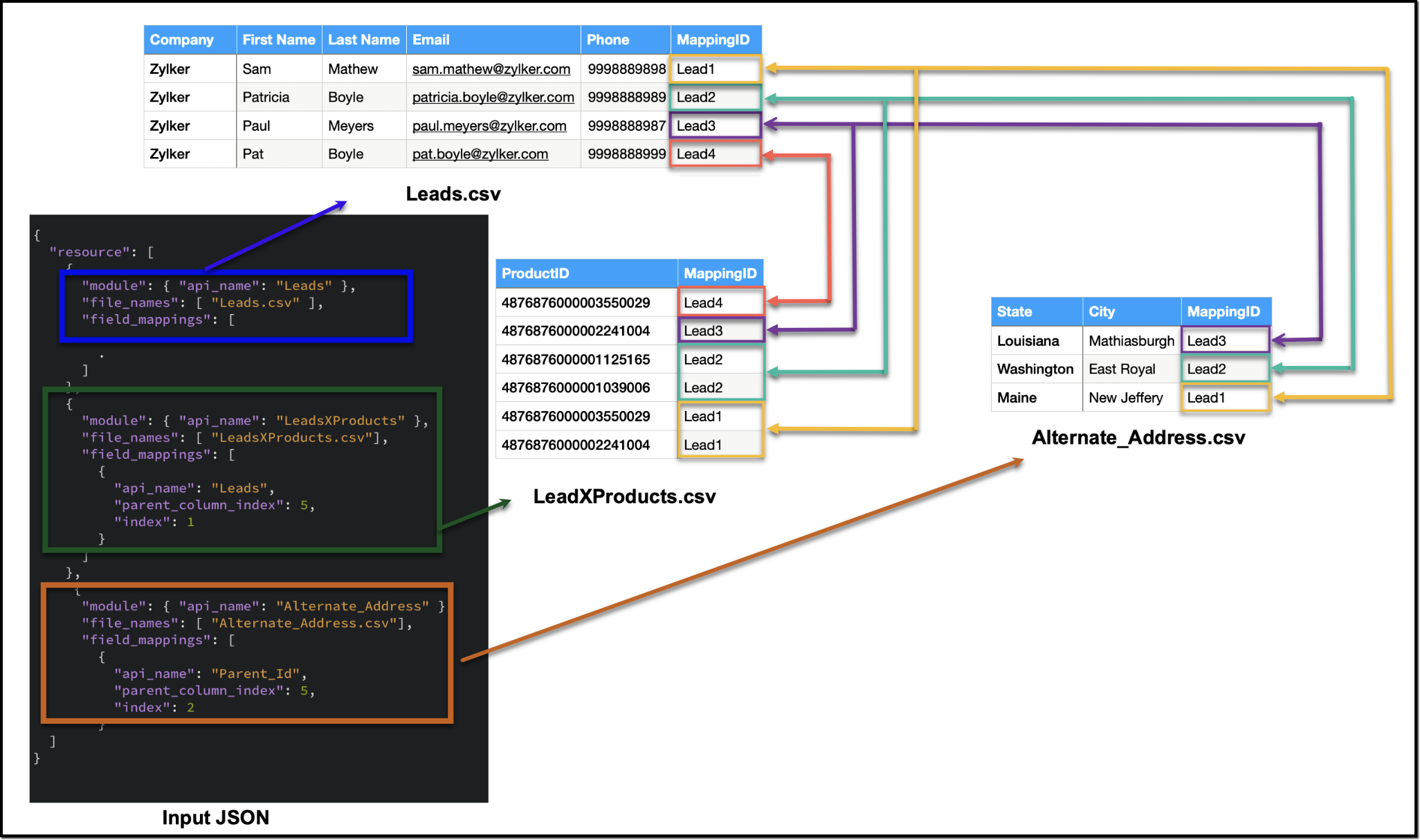

When configuring field mappings for bulk write operations involving parent-child records in a single API call, there are two key aspects to consider: creating the CSV file containing the data and constructing the input JSON for the bulk write job.

Creating the data CSV file:

To set up the data for a bulk write operation involving parent-child records, you need to prepare separate CSV files - one for the parent module records, and one each for each child module records. In these CSV files, appropriate field mappings for both parent and child records need to be defined.

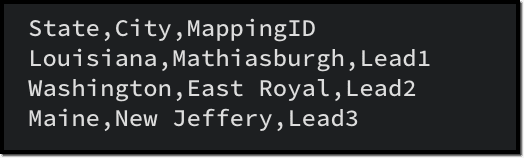

The parent CSV file will contain the parent records, while the child CSV file will contain the child records. To make sure that each child record is linked to its respective parent record, we will add an extra column (MappingID in the image below) to both the parent and child CSV files. This column will have a unique identifier value for each parent record. For each record in the child CSV file, the value in the identifier column should match the value of the identifier of the parent record in the parent CSV file. This ensures an accurate relationship between the parent and child records during the bulk write operation.

Please be aware that the mapping of values is solely dependent on the mappings defined in the input JSON. In this case, the column names in the CSV file serve only as a reference for you. Please refer to the notes section towards the end of this document for more details.

Creating the CSV file remains consistent across all types of child records, and we have already discussed how each child record is linked to its respective parent record in the CSV file. To facilitate the same linkage in the input JSON, we have introduced a new key called parent_column_index. This key assists us in specifying which column in the child module's CSV file contains the identifier or index linking it to the parent record. In the upcoming sections, we will explore preparing the input JSON for various types of child records.

Additionally, since we have multiple CSV files in the zip file, we have introduced another new key named file_names in resources array. file_names helps in correctly mapping each CSV file to its corresponding module.

Ensure that when adding parent and child records in a single operation, the parent module details should be listed first, followed by the child module details in the resource array of the input body.

1. Multiselect Lookup Fields

In scenarios involving multiselect lookup fields, the Bulk Write API now allows for the import of both parent and child records in a single operation.

In the context of multiselect lookup fields, the parent module refers to the primary module where the multiselect lookup field is added. For instance, in our example, consider a multiselect lookup field in the Leads module linking to the Products module.

Parent Module : Leads

Child module : The linking module that establishes the relationship between the parent module and the related records (LeadsXProducts)

Here are the sample files for the "LeadsXProducts" case:

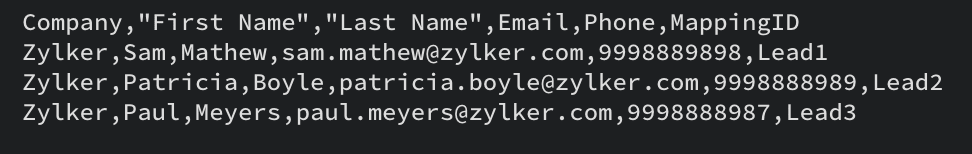

Leads.csv (Parent)

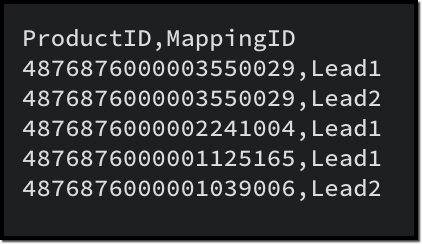

LeadsXProducts.csv (Child)

Given below is a sample input JSON for this bulk write job. Please note that the index of the child linking column should be mapped under the key index, and the index of the parent column index should be mapped under parent_column_index.

To map the child records to their corresponding parent records (linking module), you must use the field API name of the lookup field that links to the parent module. For example, in this case, the API name of the lookup field linking to the Leads module from the LeadsXProducts is Leads.

{ "operation": "insert", "ignore_empty": true, "callback": { "url": "http://www.zoho.com", "method": "post" }, "resource": [ { "type": "data", "module": { "api_name": "Leads" //parent module API name }, "file_id": "4876876000006855001", "file_names": [ "Leads.csv" //parent records CSV file ], "field_mappings": [ // field mappings for the parent record fields { "api_name": "Company", //field API name "index": 0 //index in the CSV file }, { "api_name": "First_Name", "index": 1 }, { "api_name": "Last_Name", "index": 2 }, { "api_name": "Email", "index": 3 }, { "api_name": "Phone", "index": 4 } ] }, { "type": "data", "module": { "api_name": "LeadsXProducts" //child module API name }, "file_id": "4876876000006855001", "file_names": [ "LeadsXProducts.csv" //child records CSV file ], "field_mappings": [ { "api_name": "Products", "find_by": "id", "index": 0 }, { "api_name": "Leads", //field API name of the lookup field in the Linking Module "parent_column_index": 5, // the index of the identifier column in the parent CSV file "index": 1 //index of the identifier column in the child CSV file } ] } ] } |

The following is a sample code snippet for the Scala SDK, to achieve the same functionality. Find the complete code here.

var module = new MinifiedModule() // Create a new instance of MinifiedModule module.setAPIName(Option("Leads")) // Set the API name for the module to "Leads" resourceIns.setModule(Option(module)) resourceIns.setFileId(Option("4876876000006899001")) // Set the file ID for the resource instance resourceIns.setIgnoreEmpty(Option(true)) var filenames = new ArrayBuffer[String] // Create a new ArrayBuffer to store file names filenames.addOne("Leads.csv") resourceIns.setFileNames(filenames) // Set the file names for the resource instance // Create a new ArrayBuffer to store field mappings var fieldMappings: ArrayBuffer[FieldMapping] = new ArrayBuffer[FieldMapping] // Create a new FieldMapping instance for each field var fieldMapping: FieldMapping = null fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("Company")) fieldMapping.setIndex(Option(0)) fieldMappings.addOne(fieldMapping) . . // Set the field mappings for the resource instance resourceIns.setFieldMappings(fieldMappings) resource.addOne(resourceIns) requestWrapper.setResource(resource) resourceIns = new Resource resourceIns.setType(new Choice[String]("data")) module = new MinifiedModule() module.setAPIName(Option("LeadsXProducts")) resourceIns.setModule(Option(module)) resourceIns.setFileId(Option("4876876000006899001")) resourceIns.setIgnoreEmpty(Option(true)) filenames = new ArrayBuffer[String] filenames.addOne("LeadsXProducts.csv") resourceIns.setFileNames(filenames) fieldMappings = new ArrayBuffer[FieldMapping] fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("Products")) fieldMapping.setFindBy(Option("id")) fieldMapping.setIndex(Option(0)) fieldMappings.addOne(fieldMapping) fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("Leads")) //Specify the API name of the lookup filed in the Linking Module fieldMapping.setParentColumnIndex(Option(5)) //Specify the index of the identifier column in the parent CSV file fieldMapping.setIndex(Option(1)) //Specify the index of the identifier column in the child CSV file fieldMappings.addOne(fieldMapping) resourceIns.setFieldMappings(fieldMappings) resource.addOne(resourceIns) requestWrapper.setResource(resource) |

2. Multi-User Lookup fields

In case of multi-user lookup fields, the parent module remains the module where the multi-user field is added. The child module is the lookup module created to facilitate this relationship.

For instance, let's consider a scenario where a multi-user field labeled Referred By is added in the Leads module, linking to the Users module.

Parent module : Leads

Child module : The linking module, LeadsXUsers.

To get more information about the child module, please utilize the Get Modules API. You can get the details of the fields within the child module using the Fields API.

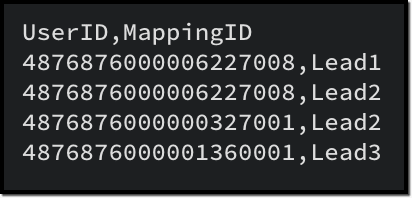

Here is a sample CSV for adding a multi-user field records along with the parent records:

LeadsXUsers.csv

Please ensure that you create a zip file containing the corresponding CSV files, upload it to the platform and then initiate the bulk write job using the file ID. The values for index and parent_column_index will vary based on your specific CSV files.

To create a bulk write job using Create Bulk Write job API, add the following code snippet to your resource array.

{ "type": "data", "module": { "api_name": "Leads_X_Users" // child module }, "file_id": "4876876000006887001", "file_names": [ "LeadsXUsers.csv" //child records CSV file name ], "field_mappings": [ { "api_name": "Referred_User", "find_by": "id", "index": 0 }, { "api_name": "userlookup221_11", //API name of the Leads lookup field in LeadsXUsers module "parent_column_index": 5, // the index of the identifier column in the parent CSV file "index": 1 // the index of the identifier column in the child CSV file } ] } |

To do the same using Scala SDK, add the following code snippet to your code:

resourceIns = new Resource resourceIns.setType(new Choice[String]("data")) module = new MinifiedModule() module.setAPIName(Option("Leads_X_Users")) resourceIns.setModule(Option(module)) resourceIns.setFileId(Option("4876876000006904001")) resourceIns.setIgnoreEmpty(Option(true)) filenames = new ArrayBuffer[String] filenames.addOne("LeadsXUsers.csv") resourceIns.setFileNames(filenames) fieldMappings = new ArrayBuffer[FieldMapping] fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("Referred_User")) fieldMapping.setFindBy(Option("id")) fieldMapping.setIndex(Option(0)) fieldMappings.addOne(fieldMapping) fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("userlookup221_11")) fieldMapping.setParentColumnIndex(Option(5)) fieldMapping.setIndex(Option(1)) fieldMappings.addOne(fieldMapping) resourceIns.setFieldMappings(fieldMappings) resource.addOne(resourceIns) requestWrapper.setResource(resource) |

3. Subform data

To import subform data along with parent records in a single operation, you must include both the parent and subform CSV files within a zip file and upload it. In this context, the parent module refers to the module where the subform is added, and the child module is the subform module.

For instance, consider a subform named Alternate Address in the Leads module, with fields such as City and State.

Parent module : Leads

Child module : Alternate_Address (api name of the Subform module).

In the subform CSV file (Alternate_Address.csv), in addition to the data columns, include a column to denote the linkage to the parent record.

Once the zip file containing both the parent and subform CSV files is prepared, proceed to upload it to initiate the import process. When you create the bulk write job, ensure to specify the appropriate values for index and parent_column_index based on your specific CSV files in the input.

Here is a sample CSV for the subform data, corresponding to the parent CSV provided earlier.

Alternate_Address.csv

To create a bulk write job using Create Bulk Write job API to import the subform data, add the following code snippet to your resource array.

{ "type": "data", "module": { "api_name": "Alternate_Address" //Subform module API name }, "file_id": "4876876000006915001", "file_names": [ "Alternate_Address.csv" //child (subform) records CSV ], "field_mappings": [ { "api_name": "State", "index": 0 }, { "api_name": "City", "index": 1 }, { "api_name": "Parent_Id", //Leads lookup field in the subform module "parent_column_index": 5, "index": 2 } ] } |

To do the same using Scala SDK, add the following code snippet to your code:

resourceIns = new Resource resourceIns.setType(new Choice[String]("data")) module = new MinifiedModule() module.setAPIName(Option("Alternate_Address")) resourceIns.setModule(Option(module)) resourceIns.setFileId(Option("4876876000006920001")) resourceIns.setIgnoreEmpty(Option(true)) filenames = new ArrayBuffer[String] filenames.addOne("Alternate_Address.csv") resourceIns.setFileNames(filenames) fieldMappings = new ArrayBuffer[FieldMapping] fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("State")) fieldMapping.setIndex(Option(0)) fieldMappings.addOne(fieldMapping) fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("City")) fieldMapping.setIndex(Option(1)) fieldMappings.addOne(fieldMapping) fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("Parent_Id")) fieldMapping.setParentColumnIndex(Option(5)) fieldMapping.setIndex(Option(2)) fieldMappings.addOne(fieldMapping) resourceIns.setFieldMappings(fieldMappings) resource.addOne(resourceIns) requestWrapper.setResource(resource) |

4. Line Items

To import line items along with the parent records, an approach similar to handling subform data is used. The parent module is the module housing the parent records, while the child module corresponds to the line item field.

For instance, in the Quotes module, to import product details within the record, the child module should be Quoted_Items.

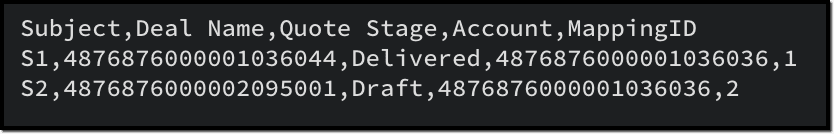

Here is a sample CSV for importing the parent records to the Quotes module:

Quotes.csv

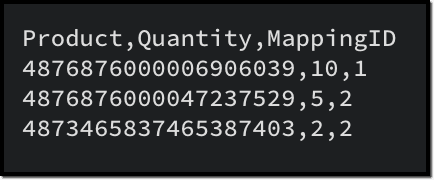

Given below is a sample CSV to add the product details in Quoted Items:

Quoted_Items.csv

Now to create a bulk write job for these records, here is a sample input JSON:

{ "operation": "insert", "ignore_empty": true, "callback": { "url": "http://www.zoho.com", "method": "post" }, "resource": [ { "type": "data", "module": { "api_name": "Quotes" }, "file_id": "4876876000006949001", "file_names": [ "Quotes.csv" ], "field_mappings": [ { "api_name": "Subject", "index": 0 }, { "api_name": "Deal_Name", "find_by" : "id", "index": 1 }, { "api_name": "Quote_Stage", "index": 2 }, { "api_name": "Account_Name", "find_by" : "id", "index": 3 } ] }, { "type": "data", "module": { "api_name": "Quoted_Items" }, "file_id": "4876876000006949001", "file_names": [ "Quoted_Items.csv" ], "field_mappings": [ { "api_name": "Product_Name", "find_by" : "id", "index": 0 }, { "api_name": "Quantity", "index": 1 }, { "api_name": "Parent_Id", "parent_column_index": 4, "index": 2 } ] } ] } |

To do the same using Scala SDK, add this code snippet to your file:

val bulkWriteOperations = new BulkWriteOperations val requestWrapper = new RequestWrapper val callback = new CallBack callback.setUrl(Option("https://www.example.com/callback")) callback.setMethod(new Choice[String]("post")) requestWrapper.setCallback(Option(callback)) requestWrapper.setCharacterEncoding(Option("UTF-8")) requestWrapper.setOperation(new Choice[String]("insert")) requestWrapper.setIgnoreEmpty(Option(true)) val resource = new ArrayBuffer[Resource] var resourceIns = new Resource resourceIns.setType(new Choice[String]("data")) var module = new MinifiedModule() module.setAPIName(Option("Quotes")) resourceIns.setModule(Option(module)) resourceIns.setFileId(Option("4876876000006953001")) resourceIns.setIgnoreEmpty(Option(true)) var filenames = new ArrayBuffer[String] filenames.addOne("Quotes.csv") resourceIns.setFileNames(filenames) var fieldMappings: ArrayBuffer[FieldMapping] = new ArrayBuffer[FieldMapping] var fieldMapping: FieldMapping = null fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("Subject")) fieldMapping.setIndex(Option(0)) fieldMappings.addOne(fieldMapping) fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("Deal_Name")) fieldMapping.setFindBy(Option("id")) fieldMapping.setIndex(Option(1)) fieldMappings.addOne(fieldMapping) fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("Quote_Stage")) fieldMapping.setIndex(Option(2)) fieldMappings.addOne(fieldMapping) fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("Account_Name")) fieldMapping.setIndex(Option(3)) fieldMapping.setFindBy(Option("id")) fieldMappings.addOne(fieldMapping) resourceIns.setFieldMappings(fieldMappings) resource.addOne(resourceIns) requestWrapper.setResource(resource) resourceIns = new Resource resourceIns.setType(new Choice[String]("data")) module = new MinifiedModule() module.setAPIName(Option("Quoted_Items")) resourceIns.setModule(Option(module)) resourceIns.setFileId(Option("4876876000006953001")) resourceIns.setIgnoreEmpty(Option(true)) filenames = new ArrayBuffer[String] filenames.addOne("Quoted_Items.csv") resourceIns.setFileNames(filenames) fieldMappings = new ArrayBuffer[FieldMapping] fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("Product_Name")) fieldMapping.setFindBy(Option("id")) fieldMapping.setIndex(Option(0)) fieldMappings.addOne(fieldMapping) fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("Quantity")) fieldMapping.setIndex(Option(1)) fieldMappings.addOne(fieldMapping) fieldMapping = new FieldMapping fieldMapping.setAPIName(Option("Parent_Id")) fieldMapping.setParentColumnIndex(Option(4)) fieldMapping.setIndex(Option(2)) fieldMappings.addOne(fieldMapping) resourceIns.setFieldMappings(fieldMappings) resource.addOne(resourceIns) requestWrapper.setResource(resource) |

Notes :

- When importing a single CSV file (parent or child module records separately), field_mappings is an optional key in the resource array. If you skip this key, the field mappings must be defined using the column names in the CSV file. In such cases, the column names should correspond to the field API names. Additionally, all columns should be mapped with the correct API names, and there should not be any extra unmapped columns.

- When importing parent and child records in a single API call, field_mappings is a mandatory key.

- The identifier column in the parent and child CSV can have different column names, as the mapping is done based on the input JSON.

Points to remember

- An uploaded file can be used for a single bulk write job only. If you want to retry the operation with the same data, upload the file again to generate a new file ID.

- When adding parent and child records in a single operation, ensure that the parent module comes first, followed by the child module details in the resource array of the input body.

- The parent and all child CSV files should be zipped into a single file and uploaded. You cannot use more than one zip file in a single bulk write job.

- Define appropriate mappings for both parent and child records using the parent_column_index and index key to establish the relationship.

- Utilize the resources > file_names key to map the correct CSV with the appropriate module

- For each parent in the parent records file:

- By default, the limit for Subforms and Line Items is set to 200. While you can configure this limit for subforms in the UI, customization options are not available for Line Items.

- MultiSelect Lookup fields have a maximum limit of 100. If you have more than 100 associations for a MultiSelect Lookup field, you may schedule additional bulk write jobs for the child records alone, importing 100 records at a time.

- The maximum limit for Multi-User Lookup fields is restricted to 10.

We hope that you found this post useful, and you have gained some insights into using the Bulk Write API effectively. If you have any queries, let us know in the comments below, or feel free to send an email to support@zohocrm.com. We would love to hear from you!

Recommended Reads :

- Check out our Kaizen Collection here

- Previous Kaizen : Zoho CRM Scala SDK (V6) - Configuration and Initialization

- Create a Bulk Write Job

- Kaizen #103 - Bulk Write API using PHP SDK (v5) - Part I

- Kaizen #104 - Bulk Write API using PHP SDK (v5) - Part II

Topic Participants

Anu Abraham

Andrea Dalseno

Calum Beck

Jeganprabhu S

Sticky Posts

Kaizen #198: Using Client Script for Custom Validation in Blueprint

Nearing 200th Kaizen Post – 1 More to the Big Two-Oh-Oh! Do you have any questions, suggestions, or topics you would like us to cover in future posts? Your insights and suggestions help us shape future content and make this series better for everyone.Kaizen #226: Using ZRC in Client Script

Hello everyone! Welcome to another week of Kaizen. In today's post, lets see what is ZRC (Zoho Request Client) and how we can use ZRC methods in Client Script to get inputs from a Salesperson and update the Lead status with a single button click. In thisKaizen #222 - Client Script Support for Notes Related List

Hello everyone! Welcome to another week of Kaizen. The final Kaizen post of the year 2025 is here! With the new Client Script support for the Notes Related List, you can validate, enrich, and manage notes across modules. In this post, we’ll explore howKaizen #217 - Actions APIs : Tasks

Welcome to another week of Kaizen! In last week's post we discussed Email Notifications APIs which act as the link between your Workflow automations and you. We have discussed how Zylker Cloud Services uses Email Notifications API in their custom dashboard.Kaizen #216 - Actions APIs : Email Notifications

Welcome to another week of Kaizen! For the last three weeks, we have been discussing Zylker's workflows. We successfully updated a dormant workflow, built a new one from the ground up and more. But our work is not finished—these automated processes are

Recent Topics

Zoho Expense Import Reports Won't Work Because Default Accounts Already Exist

Im trying to import reports from another Zoho expense account to mine and im getting errors that won't allow the import to happen The account name that you've entered 'Ground Transportation' already exists. Enter another name for the account and try again.zInactive License for free account.

I recently upgraded my Cliq subscription not my team (on the free version), are unable to login to their accounts. The error message received is Inactive License Looks like you have not been covered under the current free plan of users. Please contactSyncing zoho books into zoho crm

I was wondering how I can use zoho books in crm as I have been using them separately and would like to sync the two. Is this possible and if so, how? ThanksAnnouncing new features in Trident for Mac (1.34.0)

Hello everyone! We’re excited to introduce the latest updates to Trident, which are designed to take workplace communication to the next level. Let’s take a quick look at what’s new. Connect with customers using Zoho Voice integration. You can now easilyThe Social Wall: January 2026

Hello everyone, We’re back with the first edition of The Social Wall of 2026. There’s a lot planned for the year ahead, and we’re starting with a few useful features and improvements released in January to help you get started. Create a GBP in SocialHow to block whole domain?

I am getting at least 50-75sometimes over 100 spams emails a day. I see a lot of the spam is coming from .eu domains. I would like to block /reject all email coming for the .eu domain. I do not have any need for email from .EU domains. Why won't the BlackListHow Zoho Contracts Makes Negotiations Clear, Secure, and Transparent

Negotiation is one of the most critical—and often most chaotic—stages of the contract lifecycle. Multiple stakeholders review the same document, propose changes, debate terms, and exchange feedback. Without the right tools, this collaborative processError: Invalid Element tax_override_preference

In both Books and Inventory, we're getting the following error whenever we try to enter any Bill: I think this is a bug. Even cloning an old bill (that obviously was entered just fine), this error occurs.Assign Income to Project Without Invoice

Hello, Fairly new user here so apologies if there is a really obvious solution here that I am just missing... I have hundreds of small deposits into a bank account that I want to assign to a project but do not want to have to create an invoice every timeHow to Print the Data Model Zoho CRM

I have created the data model in Zoho CRM and I want the ability to Print this. How do we do this please? I want the diagram exported to a PDF. There doesnt appear to be an option to do this. Thanks Andrewour customers have difficult to understand the Statements

our costumers have big problems to understand Zohos Statements. we need a text box after the payment number to explain what the payments are for. Is it possible to develop a version of the Statement with that kind of box and if so whu can do itHow to track a list of ALL the items that ONE customer bought?

Hello, I am interested in getting a list of all the items that only ONE of my customers bought, and the invoices are what show up instead. It's very tedious to go through every single invoice when we sell a lot of items to this customer. Surely thereExchange Rate Updates

Hi, It would be great that when you work with multiple currencies, the exchange rate updates automagically every day (as seen on Zoho Books) or at least that when you create/update an opportunity the exchange rate could be manually updated, or maybe both!Locked Out of Super Admin Account

I'm locked out of my Super Admin account and the external e-mail is no longer associated with it. There seems to be a problem during set up, the system ought to ask to assign a new password instead of using Google accounts. Please help me get back access.Emails are going to notification folder and not in inbox

emails are going to notification folder and not into inbox550 5.4.6 Unusual sending activity detected. Please try after sometime

Hi, I am receiving this error message when trying to send my emails. The only reason I can think why this is happening is my previous two emails were bounced back to me due to a non working mailbox error. I have followed the online links for unblockingProjects Home Customization

Hello! We've been in Zoho One since July of last year, and my team has started providing feedback on what they'd like to do. Their latest wish is that they could have more control over the Projects Home content. For example, they want a card that showsIMAP search support

Does Zoho Mail support IMAP search? https://www.rfc-editor.org/rfc/rfc9051.html#name-search-command TEXT <string> Messages that contain the specified string in the header (including MIME header fields) or body of the message. Servers are allowed to implementPerformance is degrading

We have used Mail and Cliq for about three years now. I used to use both on the browser. Both have, over the past 6 months, had a severe degradation in performance. I switched to desktop email, which appeared to improve things somewhat, although initialCannot reorder fields in Page Layout in Expenses and Purchase Requests

It is very inconvenient that the custom fields in Page Layout cannot be re-ordered. The only way is to remove the fields and re-create them; however, it is impractical. This would affect the reports and dashboards we are having. Not able to re-order thebouncing emails

My recurring invoices have bounced backProbable Scam / Phising attempt from email pretending to be Zoho

I think this is a scam email right? It says "zohCworkplace.com". I'm on the Mail Free plan The link in the CTA button seems to go to a redirect. Just wanted to bring it up to the security team.Feature Request: Ability to set Default Custom Filters and apply them via URL/Deluge

I've discovered a significant gap in how Zoho Creator handles Custom Filters for reports, and I'm hoping the Zoho team can address this in a future update. This limitation has been raised before and continues to be requested, but remains unresolved. TheEmail Address Search in "To" Field Search is broken, Zoho refuses to fix it

Typing a part of the email address string other than the beginning of the string does NOT work, I kindly urge Zoho to fix this. Let's say you remember writing an email so someone called "smith" from company "corp.com", but can't remember their first name,Emails not being received by @hotmail.com, @outlook.com and a few others

When I try to send emails from zoho mail to people with email addresses ending @outlook.com and @hotmail.com (and a few others), I get a 'delayed' automatic email and then a few hours later, an 'undelivered' automatic email. This has started a few monthsscroll bar for far left of screen

I am unable to even see the scroll bar to the right of "inbox" etc on the left; it is stuck at "streams" and I can't get to inbox or anything else. It would help if it could be made a lighter color as the black or dark grey can't bee seen.OUt of office every friday

Hi, I tryed to configure my out of office, because i'm not working or emailing on fridays. But when i select only friday as unavailable day, the out of office still sets for the whole week. What am I doing wrong?Signature line

How do I set signature line in emailZoho email

I cannot send email to mail.ru【参加無料】東京 Zoho ユーザ交流会 NEXUS ー CRMで始めるマーケティング事例 / AI活用法(Zia Agents)

ユーザーの皆さま、こんにちは。コミュニティチームの藤澤です。 3月27日(金)に東京、新橋で東京 Zoho ユーザー交流会 NEXUS を開催します! 昨年度までより、さらにパワーアップして戻ってきました! ユーザー活用事例は、2人のユーザーさんからお話しいただきます。Zoho サービスの活用の幅を広げたい方や、他のユーザーの利用法を気軽に知りたい方など、多くの方にとって学びのあるセッションになること間違いなしです✨ また今年は、これまで以上に、AI機能にも焦点を当てて行く予定です。 初回として、Zoho社員からZohoIssue Exporting Data – CSRF Token Invalid Error

Dear Zoho Team, We are experiencing an issue when exporting data from our Analytics workspace. Whenever we attempt to export data from our analytical pool, the system displays the following alert message: Alert Message: The CSRF token is invalid. It couldDeluge Learning Series – Client functions in Deluge | January 2026

We’re excited to kick-start the first session of the 2026 Deluge Learning Series (DLS) with Client functions in Deluge. For those who are new to DLS, here’s a quick overview of what the series is all about: The Deluge Learning Series takes place on theZoho Mail 505 error I can not send email

Hi, I’m having issues sending emails from my custom domain email address. When I send emails to Outlook addresses, I receive an “Undeliverable 505” error. However, emails send and receive correctly when I use Gmail. This is important for my business,Multiple MFA Methods

With SMS-based MFA methods being discontinued, there is now no way to have mutliple MFA methods. I'd like to add my zoho account on two seperate phones using the Google Authenticator app. In the https://accounts.zoho.com/home#multiTFA/modes you can onlyReuse Standalone Function

I noticed that there's a missing information in documentation to reuse a standalone function and it is because the parameters require an argument. Here is my code and it is working. response = invokeurl [ url: "https://people.zoho.com/api/v3/function/sample/execute"Domain renewals

Need to know how hoe to renew the domainMX shopify problem

hello, i added all MX values in my shopify DNS - it shows those values on the shopify panel + your toolkit. I tried to send some email and it works, however on my gmail it says they cant verify this email. When i try to answer into my domain's email -Best Way to Manage Email Notifications While Running a Strategy Website

I am currently managing a content-based website, and I use Zoho Mail for handling contact forms, user queries, and collaboration emails. One challenge I am facing is organizing incoming emails efficiently, especially when messages come from differentWhat is the maximum email domains ?

I help manage about 20 associations and I'm looking for a way to centralize them in one place. Does Zoho Mail pro or enterprise support 20-30 domains for 3-5 users each?Add to Workdrive filter

I'm trying to create a filter that will upload attachments in emails and the e-mail body to a folder in workdrive. I am able to do one or the other (attachment, or e-mail content), but not both. I first tried it using the "Email (EML) + attachment" option.Next Page