Job Summary API Response | Zoho DataPrep

This document describes the structure of the job summary API response, with field descriptions and JSON examples, to help you understand pipeline execution flows, troubleshoot issues, and track data lineage.

Top-level schema

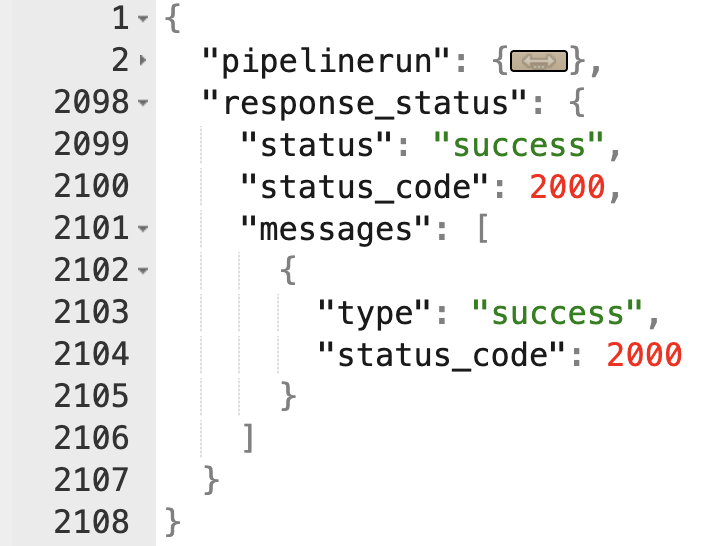

```json
{
  "pipelinerun": { ... },
  "response_status": {
    "status": "success",
    "status_code": 2000,
    "messages": [
      {
        "type": "success",
        "status_code": 2000
      }
    ]
  }
}
```

1. Response status

Field | Type | Meaning |

status | string | Response type: "success" or "failure" |

status_code | number | 2000 = success; 4000+ = error |

messages | array | List of messages about the API call |

messages[0].type | string | Message type (e.g., "success" or "error") |

messages[0].status_code | number | 2000 = success; 4000+ = error |
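Client code will usually check `response_status` before reading `pipelinerun`. The following is a minimal sketch of that check, assuming the response body has already been parsed into a Python dict (for example with `json.loads`). The function name `extract_pipelinerun` and the sample body are hypothetical.

```python
import json
from typing import Any


def extract_pipelinerun(body: dict[str, Any]) -> dict[str, Any]:
    """Return body["pipelinerun"], or raise if the API call did not succeed."""
    rs = body.get("response_status", {})
    # 2000 = success; 4000 and above = error (per the table above).
    if rs.get("status") != "success" or rs.get("status_code") != 2000:
        raise RuntimeError(
            f"Job summary request failed: {rs.get('status_code')} {rs.get('messages', [])}"
        )
    return body["pipelinerun"]


# Usage with a trimmed, hypothetical response body.
raw = """{"pipelinerun": {"id": "123"},
          "response_status": {"status": "success", "status_code": 2000,
                              "messages": [{"type": "success", "status_code": 2000}]}}"""
run = extract_pipelinerun(json.loads(raw))
print(run["id"])  # prints 123
```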

2. pipelinerun

This is the core object. It represents the full execution of a pipeline.

Common fields inside the pipelinerun object:

Field | Type | Description |

id | string | Pipeline run ID |

execution_status | number | Pipeline run execution status: 2 = Created, 0 = Running, -2 = Stopped, -3 = System killed. |

type | number | Type of run (for example, a scheduled or webhook run). |

created_time | Unix timestamp in milliseconds | Pipeline run start time |

modified_time | Unix timestamp in milliseconds | Time the pipeline run was last modified (for example, when it was killed or stopped). |

completion_time | Unix timestamp in milliseconds | Pipeline run completion time |

data_interval_start_time, | Unix timestamp in milliseconds | Data interval start time and end time |

Re-run count | number | Number of times re-run |

profile_output | boolean (true or false) | Internal usage key for output profiling |

skip_export_invalid_data | boolean (true or false) | Corresponds to the run setting "Stop export if data quality drops below 100%", which controls whether the export continues when the dataset contains invalid values. true (toggle ON): the export stops if data quality is below 100%. false (toggle OFF): the export proceeds regardless of data quality, even if invalid records exist. |

status | number | Pipeline run alive status |

storage_used | number (bytes) | Total storage used |

row_count | number | Total number of rows processed |
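The timestamps above are Unix milliseconds and `execution_status` is a numeric code, so a small helper can turn a run into something readable. This is a sketch under stated assumptions: only the status codes listed in this table are mapped (anything else is reported as "Unknown"), timestamps may arrive as numbers or numeric strings, and the names `describe_run` and `ms_to_utc` and the sample values are hypothetical.

```python
from datetime import datetime, timezone
from typing import Any, Optional

# Codes documented for pipelinerun.execution_status; anything else is
# reported as "Unknown" rather than guessed.
EXECUTION_STATUS = {2: "Created", 0: "Running", -2: "Stopped", -3: "System killed"}


def ms_to_utc(ms: Any) -> Optional[datetime]:
    """Convert a Unix timestamp in milliseconds (int or numeric string) to UTC."""
    if ms is None or ms == "":
        return None
    return datetime.fromtimestamp(int(ms) / 1000, tz=timezone.utc)


def describe_run(run: dict[str, Any]) -> dict[str, Any]:
    """Summarize a pipelinerun object in human-readable form."""
    start = ms_to_utc(run.get("created_time"))
    end = ms_to_utc(run.get("completion_time"))
    return {
        "id": run.get("id"),
        "status": EXECUTION_STATUS.get(run.get("execution_status"), "Unknown"),
        "started_at": start,
        # Duration is only computed when both timestamps are present.
        "duration_s": (end - start).total_seconds() if start and end else None,
        "rows": int(run.get("row_count") or 0),
        "storage_bytes": int(run.get("storage_used") or 0),
    }


# Hypothetical run values for illustration.
run = {"id": "123", "execution_status": -2, "created_time": 1700000000000,
       "completion_time": 1700000065000, "row_count": 3, "storage_used": 172}
summary = describe_run(run)
print(summary["status"], summary["duration_s"])  # prints Stopped 65.0
```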

1. Pipelineaudit

Field | Type | Description |

id | String | Unique ID of the pipeline audit. |

is_committed | String | Indicates whether this audit corresponds to the published version of the pipeline. |

pipeline.id | String | Unique ID of pipeline. |

status | Number | Pipeline audit alive status |

2. Pipelinerunconfigaudit

This object records details such as the run type (for example, a scheduled or webhook run) and the run settings (for example, stop export if data quality is less than 100%).

Field | Type | Description |

id | String | Unique ID of the pipeline run configuration audit. |

status | Number | Status of the configuration audit |

skip_export_invalid_data | Boolean (true or false) | Same run setting as skip_export_invalid_data under pipelinerun: true stops the export if data quality is below 100%; false lets the export proceed even if invalid records exist. |

type | Number | Type of pipeline run |

pipeline | Object | Parent pipeline linked to this configuration audit. |

pipeline.id | String | Unique ID of the pipeline. |

pipelinefile | Object | Downloaded file from the Output tab in the job summary. |

pipelinefile.id | String | Unique ID of the associated pipeline file. |

3. Created by

Field | Type | Description |

id | String | Unique ID of the user who created the run. |

zuid | String | Zoho User ID of the creator. |

username | String | Display name of the user. |

email_id | String | Email address of the creator. |

invited_time | String | Epoch timestamp of when the user was invited. |

profile | Object | Profile details of the creator. |

profile.id | String | Unique ID of the user profile. |

status | Number | Status of the user |

zsoid | String | Zoho Organization ID associated with the user. |

profilelink | String | URL to the user’s Zoho profile. |

4. Modified by

Field | Type | Description |

id | String | Unique id of the user who modified the run. |

zuid | String | Zoho User ID of the user who modified the run. |

username | String | Display name of the user who modified the run. |

email_id | String | Email address of the user who modified the run. |

invited_by | String / Null | User ID of the inviter, if applicable. Example: null (when no inviter). |

invited_time | String | Epoch timestamp of when the user was invited. |

profile | Object | Profile information of the user. |

profile.id | String | Unique id of the profile. |

profile.name | String | Name of the user's profile (role). Example: "Org Admin". |

profile.is_default | Boolean | Whether this is the default profile for the user. |

profile.zuid | String / Null | Zoho User ID mapped to this profile. Example: null. |

profile.permission | String | Permission code for the profile. Example: "7FFFFFFF" |

status | Number | Status of the user |

zsoid | String | Zoho Organization ID of the user. |

profilelink | String | URL to the user’s Zoho profile. |

has_more_rows | Boolean Array | Internal flag for pagination. |

5. Pipelinetaskinstancemappers

Key = Child task instance ID

Value (Array) = Parent task instance IDs

Field | Type | Description | Example |

<task_instance_id> | Object | Each key represents a child task instance ID in the pipeline. | "14057000007569523": ["14057000007569519"] |

Value (Array) | Array | List of parent task instance IDs the child depends on. An empty array means the task has no parents. | "14057000007569515": [] |
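Because each key maps a child task instance to its parents, the mapper is already a dependency graph. A sketch, assuming the object has been parsed into a dict of string IDs to lists, shows how Python's standard `graphlib.TopologicalSorter` (Python 3.9+) can recover an execution order in which every parent precedes its children. The function name `stage_order` and the IDs in the usage are hypothetical.

```python
from graphlib import TopologicalSorter


def stage_order(mappers: dict[str, list[str]]) -> list[str]:
    """Order task instance IDs so that every parent comes before its children.

    TopologicalSorter takes {node: predecessors}, which matches the
    {child_id: [parent_ids]} layout of pipelinetaskinstancemappers.
    Raises graphlib.CycleError if the mapping contains a cycle.
    """
    return list(TopologicalSorter(mappers).static_order())


# Hypothetical IDs: "load" depends on "clean", which depends on "import".
mappers = {"import": [], "clean": ["import"], "load": ["clean"]}
print(stage_order(mappers))  # prints ['import', 'clean', 'load']
```

Parent IDs that never appear as keys are still included in the result, since `TopologicalSorter` adds predecessors as nodes automatically.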

6. Pipelinetaskinstances

Field | Type | Description |

id | String | Unique ID of the pipeline stage instance. |

pipelinerun | Object | The pipeline run this stage instance belongs to; same structure as pipelinerun under the main object. |

pipelinetask | Object | The stage definition that this instance is based on. (explained below) |

execution_status | Number | Execution status of each stage. |

index | Number | For internal use. |

created_time | String | When the stage instance was created. |

start_time | String | When execution started. |

completion_time | String | When execution finished. |

client_meta | JSON String | UI placement information in the pipeline builder. |

message | String/Null | System or log messages. |

operator_id | String | The operator used to execute this stage. |

retry_count | Number | Number of retries attempted. Example: 0. |

storage_used | String | Storage consumed (bytes). Example: "172". |

row_count | String | Rows processed. Example: "3". |

status | Number | Active status |

time_to_live | String | Expiry time for this instance. |

rawdatasetaudit | Object | Audit info about the dataset used in this stage. |
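When troubleshooting a slow or failing run it helps to see per-stage volume at a glance. The sketch below assumes `pipelinetaskinstances` has been parsed into a list of dicts (the container type is an assumption) and that the nested `pipelinetask` object carries the stage name; `row_count` and `storage_used` are converted from the numeric strings shown in the table. The function name `summarize_stages` is hypothetical.

```python
from typing import Any


def summarize_stages(instances: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Return name, rows, storage, and retries for each stage instance."""
    summary = []
    for inst in instances:
        # The readable stage name lives on the nested pipelinetask definition;
        # fall back to the instance ID if it is missing.
        task = inst.get("pipelinetask") or {}
        summary.append({
            "stage": task.get("name", inst.get("id")),
            # row_count and storage_used are documented as numeric strings.
            "rows": int(inst.get("row_count") or 0),
            "storage_bytes": int(inst.get("storage_used") or 0),
            "retries": int(inst.get("retry_count") or 0),
        })
    return summary


# Values taken from the examples in the table above.
stages = summarize_stages([{"id": "1", "pipelinetask": {"name": "public.contactnew"},
                            "row_count": "3", "storage_used": "172", "retry_count": 0}])
print(stages)
```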

Pipeline task

Field | Type | Description |

id | String | Unique ID of the pipeline stage. |

name | String | Stage name (usually corresponds to a dataset or stage). Example: "public.contactnew". |

type | Number | Stage type identifier. |

pipeline | Object | The pipeline this stage belongs to. |

created_by | Object | Same structure as created_by under pipelinerun. |

created_time | Unix timestamp in milliseconds | Time the stage was created. |

modified_by | Object | Same structure as modified_by under pipelinerun. |

modified_time | Unix timestamp in milliseconds | Time the stage was last modified. |

status | Number | Indicates if the stage is active. Example: 1. |

latest_only | Boolean | Whether only the latest run should be considered. Example: false. |

depends_on_past | Boolean | If true, this stage only runs if its previous instance succeeded. Example: false. |

trigger_rule | String | Execution rule. Example: "ALL_SUCCESS". |

client_meta | JSON String/Null | UI placement information in the pipeline builder. |

weight | Number | Priority weight for scheduling. Example: 1. |

a. Raw dataset audit

This contains details about the imported dataset.

Field | Type | Description |

id | String | Unique id for the dataset audit. |

audit_time | String (timestamp) | Time when the dataset import was audited. |

is_referred | Boolean | Indicates whether this dataset was reused from a previous import. |

datasourceaudit | Object | Datasource audit ID of the source. |

rawdatasetconfigaudit | Object | Dataset config audit ID. |

rawdataset | Object | Details of the dataset being audited (explained below). |

status | Number | Audit status. |

error_message | String / null | Error details if the audit failed, otherwise null. |

i) Rawdataset

Field | Type | Description |

id | String | Unique ID of the dataset. |

name | String | Dataset name. Example: "public.contactnew". |

replication_frequency | String/Null | How often the dataset is replicated. Example: null. |

created_by | Object | Same structure as created_by under pipelinerun. |

created_time | Unix timestamp in milliseconds | Time the dataset was imported. |

modtime | String/Null | Last modified time. Example: null. |

status | Number | Status of the dataset. Example: 1. |

pipelinedatasource | Object | The datasource linked to this dataset. (explained below). |

ii) Pipeline datasource

Field | Type | Description |

id | String | Unique ID of the datasource. |

name | String | Datasource name or identifier. |

replication_type | String | Replication strategy used. |

status | Number | Status of the datasource. |

project | Object | Workspace id that owns this datasource. |

connection | Object | Connection id used for accessing the datasource. |
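The raw dataset audit, raw dataset, and pipeline datasource objects nest inside each other, so walking them yields a simple source-side lineage for each import stage. This is a sketch under assumptions: it presumes the nesting `rawdatasetaudit` → `rawdataset` → `pipelinedatasource` described in these tables is fully expanded in the response, and it skips stage instances without a raw dataset. The function name `source_lineage` and the datasource name `crm_postgres` in the test data are hypothetical.

```python
from typing import Any


def source_lineage(instances: list[dict[str, Any]]) -> dict[str, tuple[Any, Any]]:
    """Map each import stage to (imported dataset name, datasource name)."""
    lineage = {}
    for inst in instances:
        audit = inst.get("rawdatasetaudit") or {}
        dataset = audit.get("rawdataset") or {}
        if not dataset:
            continue  # not an import stage, or the object is not expanded
        source = dataset.get("pipelinedatasource") or {}
        stage = (inst.get("pipelinetask") or {}).get("name", inst.get("id"))
        lineage[stage] = (dataset.get("name"), source.get("name"))
    return lineage
```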

b. Dataset audit

Field | Type | Description |

id | String | Unique id for this dataset audit. |

created_time | String (timestamp) | When the dataset audit was created. |

created_by | Object | User who triggered the audit (same structure as explained under pipelinerun.created_by). |

audit_time | String (timestamp) | When the dataset audit started. |

completion_time | String (timestamp) | When the dataset audit finished. |

execution_status | Number | Status of the audit execution. |

description | String/null | Optional description. |

dataset | Object | Dataset linked to this audit. (Explained below) |

exportcatalogaudit | Object / null | Target matching |

rulesetaudit | Object | Information about the applied ruleset. (Explained below) |

modtime | String (timestamp) | Last modified time for this audit. |

status | Number | Current status of the audit. |

type | Number | For internal use. |

error_message | String / null | Error details if audit failed, otherwise null. |

datasetstate | Object | Current state of the dataset after audit. (Explained below) |

rules_count | Number | Number of rules applied in this dataset audit. |

i) Dataset

Field | Type | Description |

id | String | Unique dataset ID. |

name | String | Name of the dataset. Example: "contact". |

description | String / null | Optional description of the dataset. |

status | Number | Status of the dataset. |

is_ready | Boolean (true/false) | Whether the dataset is ready for use. |

is_pii_marked | Boolean | Whether the dataset has been flagged for containing Personally Identifiable Information (PII). |

project | Object | Workspace id this dataset belongs to |

selected_sample | Object | ID of the sample selected for quick preview. |

ii) Rulesetaudit

Field | Type | Description |

id | String | Unique id of this ruleset audit. |

audit_time | String (timestamp) | When the ruleset audit was executed. |

is_committed | String | Whether the ruleset has been committed. |

ruleset | Object | The applied ruleset id |

status | Number | Status of the ruleset audit. |

rules_count | Number | Total number of rules applied in this audit. |

iii) Datasetstate

Field | Type | Description |

id | String | Unique id for this dataset state. |

datasetaudit | Object | Links back to the dataset audit id. |

execution_status | Number | Execution result. |

is_error | Boolean | Whether the state contains an error. |

error_message | String/null | Error details if is_error = true. |

c. Dataset export audit

Field | Type | Description |

id | String | Unique id of the export audit. |

dsauditstatefile | Object | Refers to the dataset state file id used in this export |

datasinkaudit | Object | Links to the datasink audit record id |

datasetexport | Object | Reference to the export configuration and the dataset involved. (explained below). |

datasetexportconfigaudit | Object | Audit record for the export configuration id |

created_time | String (timestamp) | When this export audit was created. |

error_message | String / null | Error details if export failed. |

info | String (JSON) | Extra info about export execution. Example: {"currentRetry":0}. |

execution_status | Number | Status of execution. 1 = Success |

progress | Number | Progress percentage. Example: 100. |

status | Number | Status of the audit record. |

type | Number | For internal use. |

datasetexportauditmetric | Object | Output metrics recorded for this export (explained below). |

i) Dataset export

Field | Type | Description |

id | String | Unique id of the dataset export. |

dataset | Object | Dataset being exported (uses an already defined dataset). |

datasink | Object | Where the dataset is exported. |

created_by | Object | User who created the export (standard user structure). |

created_time | String (timestamp) | When export was created. |

modified_by | Object / null | Last user who modified the export. |

modtime | String / null | Last modified time. |

status | Number | Status of the export. |

datasetexportaudit | Object | Nested audit of this export -> { id, dsauditstatefile, datasinkaudit, datasetexport, datasetexportconfigaudit, created_by, etc. } (essentially self-referencing). |

ii) Datasink

Field | Type | Description |

id | String | Unique id of the datasink. |

status | Number | Status of the datasink. Example: 1 = Active. |

project | Object | The workspace id this datasink belongs to. |

connection | Object | Connection details used for export (see below). |

iii) Connection (inside datasink)

Field | Type | Description |

id | String | Connection identifier. |

name | String | Display name of the connection. |

connection_json | String (JSON) | Connection-specific info. |

type | String | Connection type code. |

created_by | Object | The user who created the connection |

owner | Object | Owner of the connection |

created_time | String (timestamp) | When the connection was created. |

modified_by | Object | Last user who modified the connection |

modtime | String (timestamp) | Last modified time. |

status | Number | Status of the connection. Example: 1 = Active. |

category | String / null | Category of the connection. |

base_type | String | Base service type. Example: "box". |

sub_type | String / null | Subtype of the connection if applicable. |

iv) Dataset export audit metric

Field | Type | Description |

id | String | Id of this metrics record. |

row_count | String (number as string) | Number of rows exported. |

col_count | String (number as string) | Number of columns exported. |

size | String (number as string) | Data size in bytes. |

status | Number | Status of metrics record. |

datasetexportaudit | Object | Links back to export audit -> { id, etc. }. |

quality | String (JSON) | Quality of the dataset. Example: {"valid":57,"invalid":0,"missing":151}. |
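The `quality` field is a JSON string, not a nested object, so it must be decoded before use. A minimal sketch follows; it assumes the three keys shown in the example and flags any non-zero `invalid` count, which is the condition the `skip_export_invalid_data` setting guards against. How DataPrep weighs `missing` values when computing quality is not covered on this page, so the sketch does not compute a percentage. The function name `quality_counts` is hypothetical.

```python
import json
from typing import Any


def quality_counts(metric: dict[str, Any]) -> dict[str, Any]:
    """Decode the quality JSON string of a datasetexportauditmetric record."""
    counts = json.loads(metric.get("quality") or "{}")
    valid = int(counts.get("valid", 0))
    invalid = int(counts.get("invalid", 0))
    missing = int(counts.get("missing", 0))
    return {
        "valid": valid,
        "invalid": invalid,
        "missing": missing,
        # Assumption: any invalid value means quality is below 100%.
        "has_invalid": invalid > 0,
    }


print(quality_counts({"quality": '{"valid":57,"invalid":0,"missing":151}'}))
# prints {'valid': 57, 'invalid': 0, 'missing': 151, 'has_invalid': False}
```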

d. Datasource audit

Field | Type | Description |

id | String | Unique ID of this datasource audit. |

execution_status | Number | Execution state of the datasource audit. 1 = Success |

streams_info | String (JSON) | Import notes |

killed_batch_catalog_ids | String / null | IDs of any batch catalogs that were terminated during execution. |

completed_time | String (timestamp) | Audit completion time. |

status | Number | Status of the audit. Example: 1 = Active. |

type | Number | Type of audit. Example: 1 = Datasource. |

pipelinedatasource | Object | Reference to the datasource used. Includes its id, name, replication_type, created_by, project, and connection (same structure as explained under connection and user fields). |

error_message | String / null | Error details if execution failed. |

logs | Array | For internal use. |
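The raw dataset audit, dataset audit, dataset state, dataset export audit, and datasource audit all carry an `error_message` field that is null when nothing failed. Rather than visiting each object by hand, a troubleshooting script can walk the whole parsed response and report every non-empty `error_message` with its location. This is a sketch, assuming the response has been parsed into plain dicts and lists; the function name `find_errors` and the `$`-rooted path notation are illustrative choices.

```python
from typing import Any, Iterator


def find_errors(node: Any, path: str = "$") -> Iterator[tuple[str, str]]:
    """Yield (path, message) for every non-empty error_message field."""
    if isinstance(node, dict):
        for key, value in node.items():
            child = f"{path}.{key}"
            if key == "error_message":
                if value:  # null or empty means no error at this level
                    yield child, str(value)
            else:
                yield from find_errors(value, child)
    elif isinstance(node, list):
        for i, item in enumerate(node):
            yield from find_errors(item, f"{path}[{i}]")
```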

7. Pipelinerunaudit

The pipeline run audit records the details of a pipeline run, including its settings, execution status, storage used, the pipeline and workspace it belongs to, and how much data was processed.

Field | Type | Description |

id | String | Unique ID of the pipeline run audit. |

created_time | String (timestamp) | The time when the run audit was created. |

modtime | String (timestamp) | Last modified time of the run audit. |

pipelinerun | Object | Contains details about the pipeline run. Structure is the same as explained under pipelinerun. |

status | Number | Pipeline run alive status |

execution_policy | Number | Execution policy (for internal use). |

execution_status | Number | Pipeline run execution status |

created_by | Object | User who created the run audit. Same structure as created_by. |

modified_by | Object | User who last modified the run audit. Same structure as modified_by. |

completion_time | String (timestamp) | Time when the run audit was completed. |

storage_used | Number (bytes) | Storage consumed during this run. |

row_count | String | Number of rows processed in this run. |

pipeline | Object | Pipeline details (id, name, project, status). Same structure as the pipeline below. |
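For monitoring, the run audit can be reduced to a single line naming the workspace and pipeline along with the processed volume. This sketch assumes the nested `pipeline` object and its `project` (described in the Pipeline and Project tables) are expanded with their `name` fields, and that `row_count` and `storage_used` may arrive as numbers or numeric strings. The function name `run_audit_line` is hypothetical; the names in the usage come from the examples in those tables.

```python
from typing import Any


def run_audit_line(audit: dict[str, Any]) -> str:
    """One-line summary: workspace / pipeline: rows, bytes."""
    pipeline = audit.get("pipeline") or {}
    project = pipeline.get("project") or {}
    rows = int(audit.get("row_count") or 0)
    size = int(audit.get("storage_used") or 0)
    return f"{project.get('name', '?')} / {pipeline.get('name', '?')}: {rows} rows, {size} bytes"


audit = {"row_count": "3", "storage_used": 172,
         "pipeline": {"name": "My pipeline-1", "project": {"name": "RestAPI test"}}}
print(run_audit_line(audit))  # prints RestAPI test / My pipeline-1: 3 rows, 172 bytes
```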

8. Pipeline

Represents the pipeline that was executed in the run.

Field | Type | Description |

id | String | Unique id of the pipeline. |

name | String | Name of the pipeline. Example: "My pipeline-1" |

description | String / Null | Description of the pipeline. Example: null if no description is provided. |

created_by | Object | Details of the user who created the pipeline (same structure as created_by under pipelinerun). |

created_time | String | Epoch timestamp when the pipeline was created. |

modified_by | Object | Details of the user who last modified the pipeline (same structure as modified_by under pipelinerun). |

modtime | String | Epoch timestamp when the pipeline was last modified. |

status | Number | Status of the pipeline |

is_ready | Boolean | Whether the pipeline is ready to run. |

depends_on_past | Boolean | Whether the pipeline run depends on past runs |

project | Object | The workspace that this pipeline belongs to. |

Project

Represents the workspace containing the pipeline.

Field | Type | Description |

id | String | Unique id of the workspace. |

name | String | Name of the workspace. Example: "RestAPI test" |

description | String / Null | Not in use |

created_by | Object | Details of the user who created the workspace. |

created_time | String | Epoch timestamp when the workspace was created. |

modified_by | Object | Details of the user who last modified the workspace. |

modtime | String | Epoch timestamp when the workspace was last modified. |

status | Number | Status of the workspace |

migration_status | Number | Migration status of the workspace. |

is_locked | Boolean | No longer in use. |
